Building NeetoDeploy CLI

December 26, 2023

We are building NeetoDeploy, a compelling Heroku alternative. Stay updated by following NeetoDeploy on Twitter and reading our blog.

Building the CLI tool for NeetoDeploy became our top priority after we had built all the basic features in NeetoDeploy. Once we started migrating our apps from Heroku to NeetoDeploy, the need for a CLI tool arose, since developers had been relying on the Heroku CLI in their scripts and day-to-day workflows.

We started building the CLI as a Ruby gem using Thor.

Installing the gem and using it would be as simple as running gem install neetodeploy. We wanted the users to be authenticated via Neeto's authentication system before they could do anything else with the CLI. For this, we added a neetodeploy login command, which would create a session and redirect you to the browser where you can log in. The CLI would poll the session login status, and once you have logged in, it would store the session token and your email address inside the ~/.config/neetodeploy directory. These are used to authenticate your requests when you run any other command.
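The polling step can be sketched roughly as follows. This is a minimal illustration, not the gem's actual code: the LoginPoller class, the shape of the status hash, and the session.json file name are all assumptions made for the example. Only the ~/.config/neetodeploy directory comes from the description above.

```ruby
require "json"
require "fileutils"

# Hypothetical helper: polls a login session until it is authenticated, then
# persists the token and email. `fetch_status` is any callable returning a Hash
# such as { "authenticated" => true, "token" => "...", "email" => "..." }.
class LoginPoller
  def initialize(fetch_status:,
                 config_dir: File.join(Dir.home, ".config", "neetodeploy"),
                 interval: 2, max_attempts: 150)
    @fetch_status = fetch_status
    @config_dir = config_dir
    @interval = interval
    @max_attempts = max_attempts
  end

  # Returns the path of the saved credentials file, or nil if login timed out.
  def call
    @max_attempts.times do
      status = @fetch_status.call
      return save(status) if status["authenticated"]

      sleep(@interval)
    end
    nil
  end

  private

  def save(status)
    FileUtils.mkdir_p(@config_dir)
    path = File.join(@config_dir, "session.json") # assumed file name
    payload = { "token" => status.fetch("token"), "email" => status.fetch("email") }
    File.write(path, JSON.pretty_generate(payload))
    File.chmod(0o600, path) # keep the token readable by the owner only
    path
  end
end
```

In a real CLI, fetch_status would wrap an HTTP call to the session endpoint. Injecting it as a callable keeps the polling logic easy to test without a network.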

The basic CLI functionality

The v1 release of the NeetoDeploy CLI shipped the following commands:

- config: For listing, creating, and deleting environment variables for your apps.
- exec: For getting access to a shell running inside your deployed app.
- logs: For streaming the application logs right to your terminal.

Let's look at each of them in detail.

Setting environment vars

Building the config command was the most straightforward of the three. The central source of truth for all the environment variables of the apps is the web dashboard of NeetoDeploy. There were APIs already in place for doing CRUD operations on these from the dashboard app, and these could be extended for use by the CLI as well.

We first added API endpoints in the dashboard app, under a CLI namespace, to list, create, and delete config variables. We then added different commands in the CLI to send HTTP requests to the respective endpoints in the dashboard app.

You can pass the app name as an argument, and the CLI will send a request to the dashboard app along with the session token generated when you logged in. At the backend, this session token would be used to check if you have access to the app you're requesting, before doing anything else.
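As a rough sketch of what such a request could look like from the CLI's side, the snippet below builds (but does not send) the HTTP requests for listing, setting, and unsetting config variables. The base URL, the route, the Bearer header, and the build_config_request helper are all hypothetical. The post does not describe the real endpoints or how the session token is transmitted.

```ruby
require "net/http"
require "uri"
require "json"

# Hypothetical base URL and route; the real dashboard endpoints are not public.
BASE_URL = "https://dashboard.example.com/api/cli"

# Builds a Net::HTTP request object for one config action.
def build_config_request(action:, app:, token:, vars: {}, keys: [])
  uri = URI("#{BASE_URL}/apps/#{URI.encode_www_form_component(app)}/config_vars")
  request =
    case action
    when :list
      Net::HTTP::Get.new(uri)
    when :set
      Net::HTTP::Post.new(uri).tap do |req|
        req["Content-Type"] = "application/json"
        req.body = JSON.generate(vars)
      end
    when :unset
      uri.query = URI.encode_www_form("keys" => keys)
      Net::HTTP::Delete.new(uri)
    else
      raise ArgumentError, "unknown action: #{action}"
    end
  # Assumption: the session token travels as a Bearer token.
  request["Authorization"] = "Bearer #{token}"
  request
end

# Sends a request built above and returns the Net::HTTPResponse.
def send_request(request)
  uri = request.uri
  Net::HTTP.start(uri.host, uri.port, use_ssl: uri.scheme == "https") do |http|
    http.request(request)
  end
end
```

Keeping request construction separate from sending makes it simple to check that every command attaches the token before anything reaches the network.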

If you have used Heroku CLI, then you would be very comfortable since NeetoDeploy CLI follows the same style.

Architecting the neetodeploy exec command

The exec command is used to get access to a shell inside your deployed app. The NeetoDeploy web dashboard already has a functioning console feature with which you can get access to the shell inside your application’s dynos. This was built using websockets. We would deploy a "console" pod with the same image and environment variables as the app, to which we'll connect with websockets. We have a dyno-console-manager app which spawns a new process inside the console dyno and handles the websocket connections, etc. When we started building the exec feature for the CLI however, we explored a few options and learnt a lot of things before finally settling back on the websockets approach.

We’ve been using the kubectl exec command on a daily basis to get access to shells inside pods running in the cluster. Since kubectl exec is cosmetically similar to how we use SSH to access remote machines, the first approach we took involved using SSH to expose containers outside the cluster.

We can expose our deployment outside the cluster using SSH in two ways. The first approach would be running an SSH server as a sidecar container within the console pod. The second approach would be to bundle sshd within the image for the console pod using a custom buildpack. With either of these two methods, you would be able to SSH into your console deployment if you have the correct SSH keys. We ran sshd inside a pod using a custom sshd buildpack. We then configured the SSH public key inside the pod manually with kubectl exec. With this, we were able to SSH into the pod after port forwarding the sshd process’s port to localhost using kubectl port-forward.

Great! Now that the SSH connection works, the part of the puzzle that remained now was how we would actually expose the deployment outside the cluster in production. We tried doing this in a couple of different ways.

1. LoadBalancer

We were able to SSH into the pod after exposing it as a LoadBalancer service. This was out of the question for production however, since AWS has a hard limit on the number of application load balancers (ALB) we can create per region. We’d also have to pay for each load balancer we create. This approach wouldn’t scale at all.

2. NodePort

The next option was to expose the pod as a NodePort service. A NodePort service in Kubernetes exposes the pod on a fixed port number on every node in the cluster, so we could SSH into the pod using the external IP of any of the nodes. We tried exposing the test console pod we created with a NodePort service as well. We were able to SSH into the pod from outside the cluster without having to do the port forward. There was one limitation of NodePorts that we knew would render this method suboptimal for building a Platform as a Service. The range of ports that can be allocated for NodePort services is from 30000 to 32767 by default. This means that we’d only be able to run 2768 instances of neetodeploy exec at any given time in our current cluster setup in EKS.
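The arithmetic behind that ceiling is easy to check. The snippet below assumes the Kubernetes default NodePort range of 30000 through 32767 inclusive. The helper name and the reserved parameter are illustrative only.

```ruby
# Default NodePort range in Kubernetes, inclusive on both ends.
NODE_PORT_RANGE = (30_000..32_767)

# Upper bound on simultaneous NodePort services, minus any ports that are
# already taken by other services in the cluster.
def max_concurrent_node_ports(range = NODE_PORT_RANGE, reserved: 0)
  [range.size - reserved, 0].max
end

puts max_concurrent_node_ports # prints 2768
```

Every other NodePort service in the cluster eats into the same pool, so the practical limit would be lower still.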

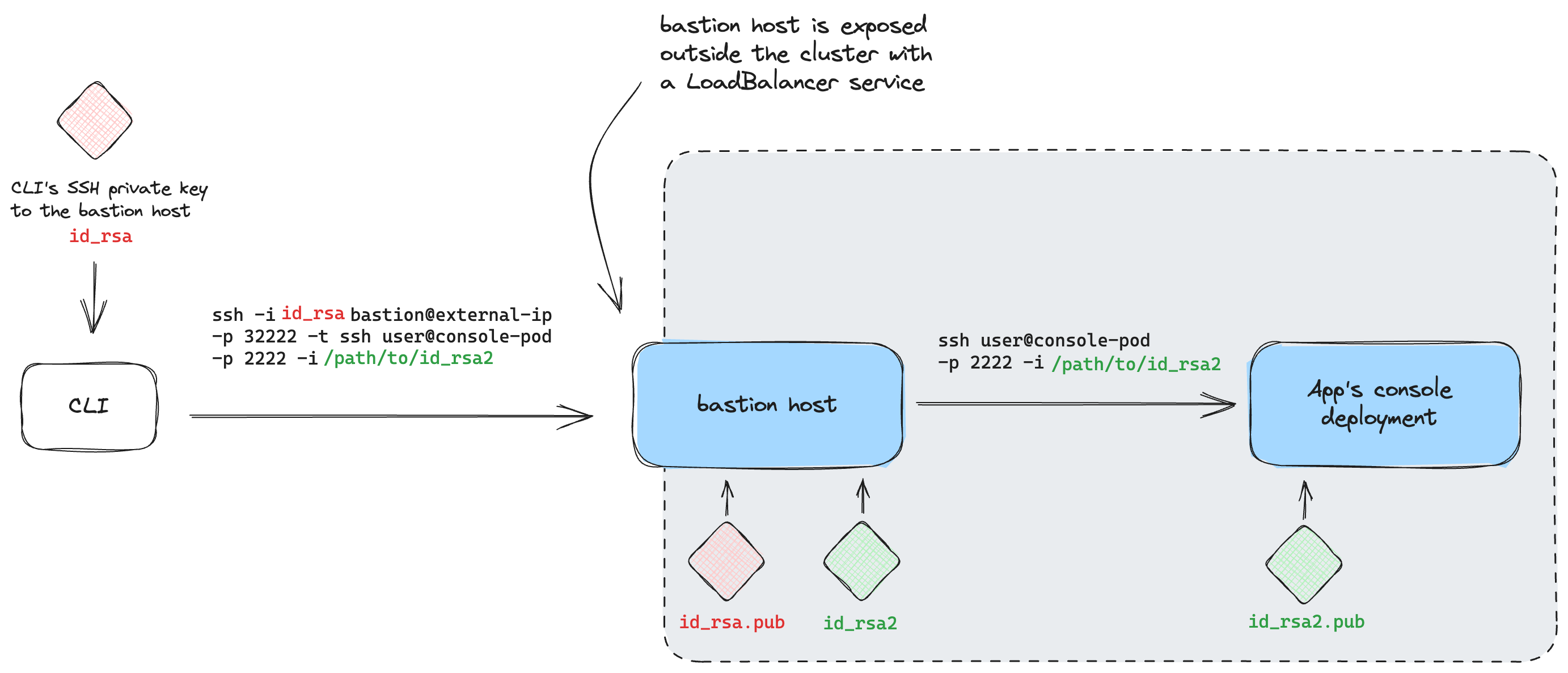

3. Bastion host, the SSH proxy server

We thought of deploying an SSH proxy server to circumvent the hard limits on the LoadBalancer and NodePort approaches. Such proxy servers are usually called bastion hosts or jump servers. These are servers that are designed to provide access to a private network from a public network. The name "bastion" comes from the military structure that projects outwards from a castle or a fort. In a similar sense, our bastion host will be a Kubernetes deployment that is exposed to the public network and acts as an interface between our private cluster and the public internet. For our requirement, we can proxy SSH connections to console pods through this bastion host deployment. We only needed to expose the bastion host as a single LoadBalancer or a NodePort service, to which the CLI can connect. This would solve the issue of having a hard limit on the number of LoadBalancer or NodePort services we can create.

We quickly set up a bastion host and tested the whole idea. It was working seamlessly! However, there were a lot of edge cases we'd have to handle if we are going to release this to the public. Ideally, we would want a new pair of SSH keys for each console pod. We could generate this and store it as secrets in the cluster. Apart from the hassle of handling all the SSH keys and updating the bastion host each time, we would have to make sure that users will not be able to get SSH access to the bastion host deployment manually, outside the CLI. This was difficult since the CLI would need access to the private key in order to connect to the bastion host anyway. This would mean that users could SSH into the bastion host after digging through the CLI gem's source, and possibly even exec into any deployment in the cluster if they knew what they were looking for. Users with malicious intent could possibly bring down the bastion host itself once they're inside, rendering the exec command unusable.

We thought about installing kubectl inside the bastion host with a restrictive RBAC so that we can run kubectl exec from inside the bastion host, also while making sure that users could not run anything destructive from inside the bastion host. But this just adds more moving parts to the system. The SSH proxy approach makes sense if you have to expose your cluster among developers for internal use, but it is not ideal when you are building a CLI for public use.

After figuring out how not to solve the problem, we came to the conclusion that using websockets was the better approach for now. We already had the dyno-console-manager app, which we were using for shell access from the web dashboard, so we updated it to handle connections from the CLI as well. On the CLI's end, we wrote a simple WebSocket client that handles parsing user input and printing the responses from the shell. All of the commands you enter are run on the console pod through the websocket connection.
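To illustrate the CLI side, here is a minimal sketch of the input and output handling, decoupled from any particular WebSocket library. The ExecSession class, the JSON frame format, and the transmit callable are assumptions made for this example. The post does not describe the actual wire protocol used by dyno-console-manager.

```ruby
require "json"

# Hypothetical wire format: JSON frames such as {"type":"input","data":"ls\n"}
# going out and {"type":"output","data":"..."} coming back.
class ExecSession
  def initialize(transmit:, input:, output:)
    @transmit = transmit # callable that sends one text frame over the socket
    @input = input       # IO to read user commands from, e.g. $stdin
    @output = output     # IO to print shell output to, e.g. $stdout
  end

  # Forward each line typed by the user to the console pod until "exit".
  def pump_input
    @input.each_line do |line|
      break if line.strip == "exit"

      @transmit.call(JSON.generate("type" => "input", "data" => line))
    end
  end

  # Intended to be called from the WebSocket client's on-message callback.
  def handle_message(raw)
    frame = JSON.parse(raw)
    if frame.is_a?(Hash) && frame["type"] == "output"
      @output.write(frame["data"].to_s)
    end
  rescue JSON::ParserError
    @output.write(raw) # treat non-JSON frames as plain shell output
  end
end
```

With a real client, transmit would wrap the library's send call and handle_message would be registered as the message handler. Keeping the session logic free of socket details makes it testable with plain StringIO objects.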

Streaming logs to the terminal

Live logs were streamed to the web dashboard using websockets too. This was also handled by the above-mentioned dyno-console-manager. Since we had written a Ruby websocket client for the exec command, we decided to use the same approach with logs too.

The CLI would send a request to the dyno-console-manager with the app’s name and the dyno-console-manager would run kubectl logs for your app's deployment and stream it back to the CLI via a websocket connection it creates.
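The server-side half of that flow might look something like the sketch below: run a command, read its output line by line, and hand each line to whatever pushes it over the websocket. This is an assumption-laden illustration written in Ruby. The post does not say what dyno-console-manager is written in, how deployments are named, or which kubectl flags it passes, so logs_command and stream_command are hypothetical helpers.

```ruby
require "open3"

# Assumed mapping from app name to deployment; namespace and naming
# conventions in the real cluster may differ.
def logs_command(app_name)
  ["kubectl", "logs", "--follow", "deployment/#{app_name}"]
end

# Runs the command without a shell and yields each output line as it arrives.
# Returns true if the process exited successfully.
def stream_command(command)
  Open3.popen2e(*command) do |stdin, out, wait_thread|
    stdin.close
    out.each_line { |line| yield line }
    wait_thread.value.success?
  end
end
```

A caller would do something like stream_command(logs_command("my-app")) { |line| socket_send.call(line) }, where socket_send stands in for the websocket write. Passing the command as an array avoids shell interpolation of the app name.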

We took our time with architecting the NeetoDeploy CLI, but thanks to that we learnt a lot about designing robust systems that would scale in the long term.

If your application runs on Heroku, you can deploy it on NeetoDeploy without any change. If you want to give NeetoDeploy a try, then please send us an email at [email protected].

If you have questions about NeetoDeploy or want to see the journey, follow NeetoDeploy on X. You can also join our community Slack to chat with us about any Neeto product.
