How we fixed app downtime issue in NeetoDeploy

July 9, 2024

We are building NeetoDeploy, a compelling alternative to Heroku. Stay updated by following NeetoDeploy on Twitter and reading our blog.

At neeto we are building 20+ applications, and most of our applications are running in NeetoDeploy. Once we migrated from Heroku to NeetoDeploy, we started getting 520 response code for our applications. This issue was occurring randomly and rarely.

What is 520 response code?

A 520 response code happens when the connection is started on the origin web server, but the request is not completed. This could be due to server crashes or the inability to handle the incoming requests because of insufficient resources.

When we looked at our logs closely, we found that all the 520 response code situations occurred when we restarted or deployed the app. From this, we concluded that the new pods are failing to handle requests from the client initially and working fine after some time.

What is wrong with new pods?

Once our investigation narrowed down to the new pods, we quickly realized that requests are arriving at the server even when the server is not fully ready yet to take new requests.

When we create a new pod in Kubernetes, it is marked as "Ready", and requests are sent to it as soon as its containers start. However, the servers initiated within these containers may require additional time to boot up and to become ready to accept the requests fully.

Let's try restarting the application

$ kubectl rollout restart deployment bling-staging-web

As we can see, a new container is getting created for the new pod. The READY status for the new pod is 0. It means it's not yet READY.

NAME READY STATUS RESTARTS AGE

bling-staging-web-656f74d9d-6kpzz 1/1 Running 0 2m8s

bling-staging-web-79fc6f978-cdjf5 0/1 ContainerCreating 0 5s

Now we can see that the new pod is marked as READY (1 out of 1), and the old one is terminating.

NAME READY STATUS RESTARTS AGE

bling-staging-web-656f74d9d-6kpzz 0/1 Terminating 0 2m9s

bling-staging-web-79fc6f978-cdjf5 1/1 Running 0 6s

The new pod is shown as READY as soon as the container was created. But on

checking the logs, we could see that the server was still starting up and not

ready yet.

[1] Puma starting in cluster mode...

[1] Installing dependencies...

From the above observation, we understood that the pod is marked as "READY" right after the container is created. Consequently, requests are received even before the server is fully prepared to serve them, and they get a 520 response code.

Solution

To fix this issue, we must ensure that pods are marked as "Ready" only after the server is up and ready to accept the requests. We can do this by using Kubernetes health probes. More than six years ago we wrote a blog on how we can leverage the readiness and liveness probes of Kubernetes.

Adding Startup probe

Initially, we only added Startup probe since we had a problem with the boot-up phase. You can read more about the configuration settings here.

The following configuration will add the Startup probe for the deployments:

startupProbe:

failureThreshold: 10

httpGet:

path: /health_check

port: 3000

scheme: HTTP

periodSeconds: 5

successThreshold: 1

timeoutSeconds: 60

initialDelaySeconds: 10

/health_check is a route in the application that is expected to return a 200

response code if all is going well. Now, let's restart the application again

after adding the Startup probe.

Container is created for the new pod, but the pod is still not "Ready".

NAME READY STATUS RESTARTS AGE

bling-staging-web-656f74d9d-6kpzz 1/1 Running 0 2m8s

bling-staging-web-79fc6f978-cdjf5 0/1 Running 0 5s

The new pod is marked as "Ready", and the old one is "Terminating".

NAME READY STATUS RESTARTS AGE

bling-staging-web-656f74d9d-6kpzz 0/1 Terminating 0 2m38s

bling-staging-web-79fc6f978-cdjf5 1/1 Running 0 35s

If we check the logs, we can see the health check request:

[1] Puma starting in cluster mode...

[1] Installing dependencies...

[1] * Puma version: 6.3.1 (ruby 3.2.2-p53) ("Mugi No Toki Itaru")

[1] * Min threads: 5

[1] * Max threads: 5

[1] * Environment: heroku

[1] * Master PID: 1

[1] * Workers: 1

[1] * Restarts: (✔) hot (✔) phased

[1] * Listening on http://0.0.0.0:3000

[1] Use Ctrl-C to stop

[2024-02-10T02:40:48.944785 #23] INFO -- : [bb9e756a-51cc-4d6b-9a4a-96b0464f6740] Started GET "/health_check" for 192.168.120.195 at 2024-02-10 02:40:48 +0000

[2024-02-10T02:40:48.946148 #23] INFO -- : [bb9e756a-51cc-4d6b-9a4a-96b0464f6740] Processing by HealthCheckController#healthy as */*

[2024-02-10T02:40:48.949292 #23] INFO -- : [bb9e756a-51cc-4d6b-9a4a-96b0464f6740] Completed 200 OK in 3ms (Allocations: 691)

Now, the pod is marked as "Ready" only after the health check succeeds, in other words, only when the server is prepared to accept the requests.

Fixing the Startup probe for production applications

Once we released the health check for our deployments, we found that health checks were failing for all production applications but working for staging and review applications.

We were getting the following error in our production applications.

Startup probe failed: Get "https://192.168.43.231:3000/health_check": http: server gave HTTP response to HTTPS client

2024-02-12 06:40:04 +0000 HTTP parse error, malformed request: #<Puma::HttpParserError: Invalid HTTP format, parsing fails. Are you trying to open an SSL connection to a non-SSL Puma?>

From the above logs, it was clear that the issue was related to SSL

configuration. On comparing the production environment configuration with the

others, we figured out that we had enabled

force_ssl

for production applications. The force_ssl=true setting ensures that all

incoming requests are SSL encrypted and will automatically redirect to their SSL

counterparts.

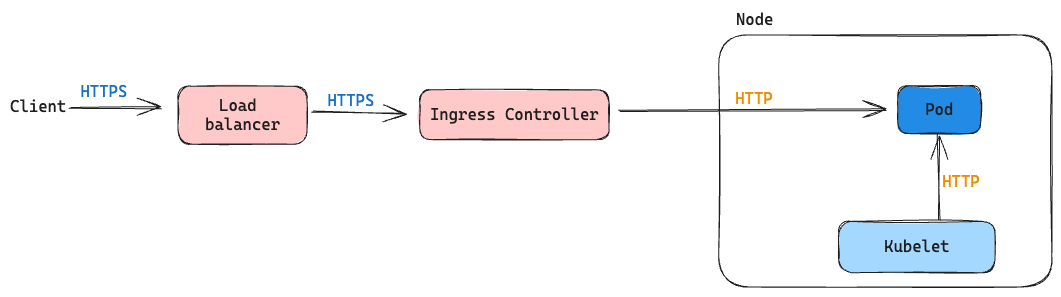

The following diagram broadly shows the path of an incoming request.

From the above diagram, we can infer the following things:

- SSL verification is happening in the ingress controller and not in the server.

- Client requests are going through the ingress controller before reaching the server.

- Request from ingress controller to the pod is an HTTP request.

- The HTTP health check requests are directly sent from Kubelet to the pod and do not go through the ingress controller.

Here is how our health check request works.

- Kubelet sends an HTTP request to the server directly.

- Since

force_sslis enabled, ActionDispatch::SSL middleware redirects the request to HTTPS. - When the HTTPS request reaches the server, Puma throws

Are you trying to open an SSL connection to a non-SSL Puma?error since no SSL certificates are configured with the server.

The solution to our problem lies in understanding why only the health check

request is rejected, whereas the request from the ingress controller is not,

even though both are HTTP requests. This is because ingress controller sets some

headers before forwarding to the pod, and the header we are concerned about is

X-FORWARDED-PROTO.

The X-Forwarded-Proto header contains the HTTP/HTTPS scheme the client used to

access the application. When a client makes an HTTPS request, the ingress

controller terminates the SSL/TLS connection and forwards the request to the

backend service using plain HTTP after adding theX-Forwarded-Proto along with

the other headers.

Everything started working after adding the X-Forwarded-Proto header to our

startup probe request.

startupProbe:

failureThreshold: 10

httpGet:

httpHeaders:

- name: X-FORWARDED-PROTO

value: https

path: <%= health_check_url %>

port: <%= port %>

scheme: HTTP

periodSeconds: 5

successThreshold: 1

timeoutSeconds: 60

initialDelaySeconds: 10

We also added Readiness and Liveness probes for our deployments.

If your application runs on Heroku, you can deploy it on NeetoDeploy without any change. If you want to give NeetoDeploy a try, then please send us an email at [email protected].

If you have questions about NeetoDeploy or want to see the journey, follow NeetoDeploy on Twitter. You can also join our Slack community to chat with us about any Neeto product.

Follow @bigbinary on X. Check out our full blog archive.