Understanding Active Record Connection Pooling

May 13, 2025

This is Part 5 of our blog series on scaling Rails applications.

Database Connection Pooling

When a Rails application needs to interact with a database, it establishes a connection, which is a dedicated communication channel between the application and the database server. When a new request comes to Rails, the operation can be handled like this.

- Create a connection

- Do database operation

- Close the connection

When the next request comes, then repeat the above process.

Creating new database connections is an expensive operation - it takes time to establish the connection, authenticate, and set up the communication channel. It means that every single time a request comes, we are spending time in setting up the connection.

Wouldn't it be better to store the established connection to somewhere and when a new request comes, then get the pre-established connection from this pool. This expedites the process since we don't need to create a connection and close a connection in every single request.

The new process might look like this.

- Create a connection

- Do database operation

- Put the connection in a pool

Now, when a new request comes, the operation will look like this.

- Get the connection from the pool

- Do database operation

- Return the connection to the pool

Database connection pooling is a performance optimization technique that maintains a set of reusable database connections.

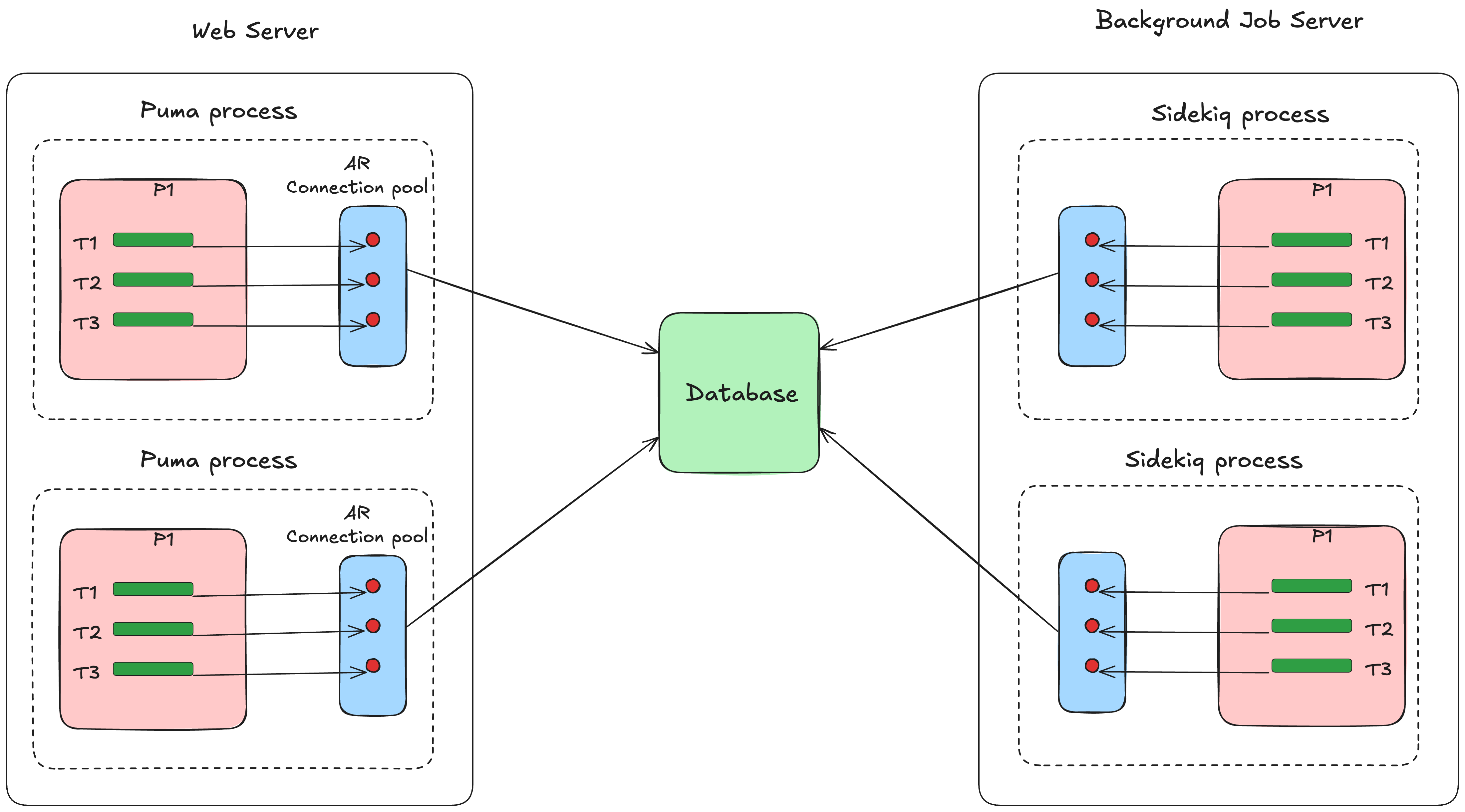

Active Record Connection Pool Implementation

Active Record manages a pool of database connections for each web and background process. Each process will have a connection pool of its own, which means a Rails application running with multiple processes (like Puma processes, Sidekiq processes) will have multiple independent connection pools. The pool is a set of database connections that are shared among threads in the same process.

Note that the pooling happens at the process level. A thread from process A can't get a connection from process B.

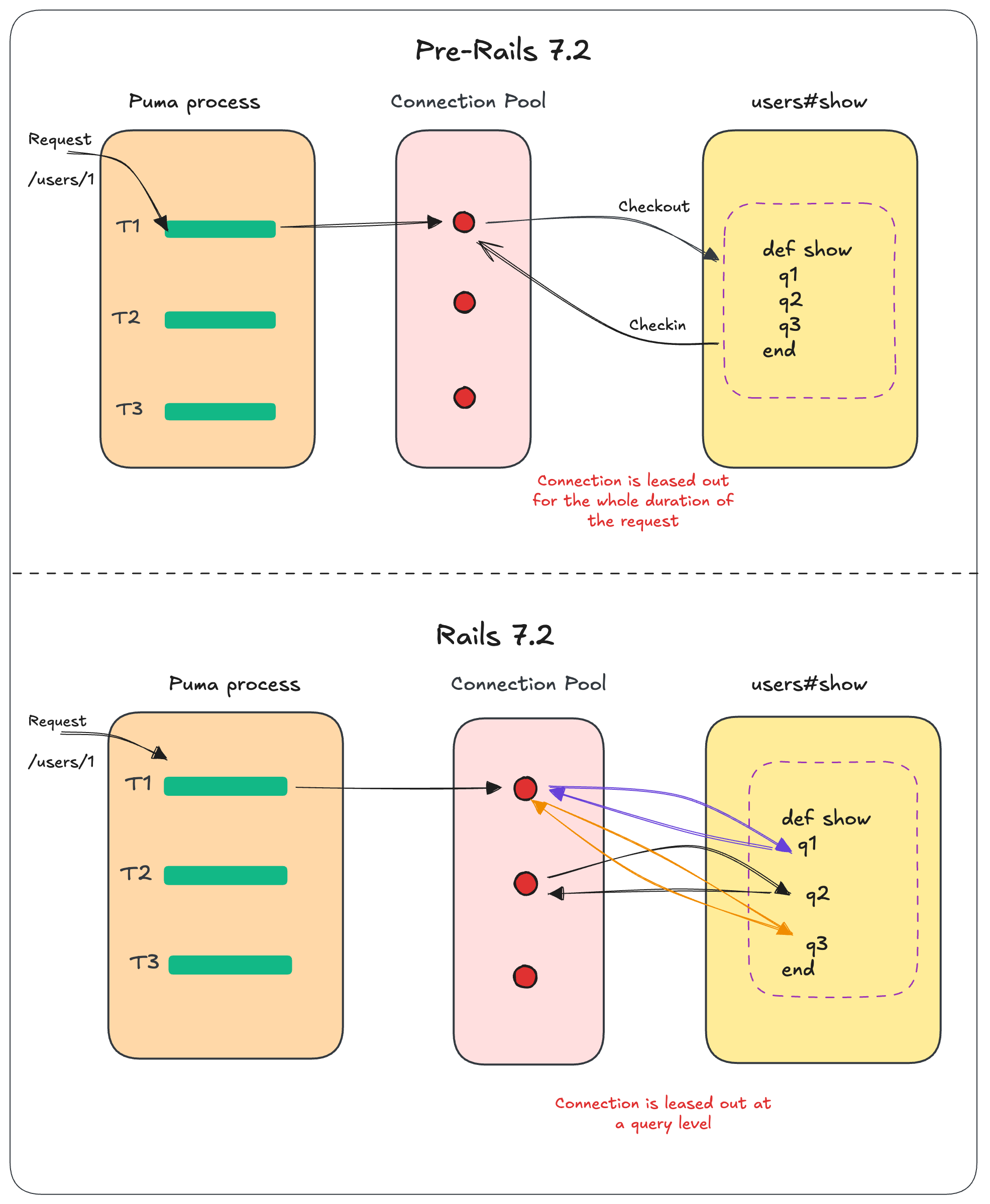

When a connection is needed, the thread checks out a connection from the pool, perform operations, and then returns the connection to the pool. This is done at a query level now. For each individual query, a connection is leased, used, and then returned back to the pool.

Pre Rails 7.2, the connection used to be leased and held till the end of the request if it is a web request and till the job is done if it is a background job. This was a problem for applications that spent a lot of time doing I/O. The thread will hog the connection for the entire duration of the I/O operation limiting the number of queries that can be executed concurrently. To facilitate this change and make query caching work, the query cache has been updated to be owned by the pool.

This means that the query cache is now shared across all connections in the pool. Previously, each connection had its own query cache. As the whole request used the same connection, this was fine. But now, as the connection is leased for each query, the query cache needs to be shared across all connections in the pool.

Connection Pool Configuration Options

Active Record's connection pool behavior can be customized through several configuration options in the database.yml file:

- pool:

Sets the maximum number of connections the pool will maintain. The default is

tied to

RAILS_MAX_THREADS, but you can set it to any value. There is a small problem when you set it toRAILS_MAX_THREADSwhich we'll discuss later. - checkout timeout:

Determines how long a thread will wait to get a connection before timing out.

The default is 5 seconds. If all connections are in use and a thread waits

longer than this value, an

ActiveRecord::ConnectionTimeoutErrorexception will be raised. - idle timeout: Specifies how long a connection can remain idle before it's removed from the pool. The default is 300 seconds. This helps reclaim resources from connections that aren't being used.

- reaping frequency: Controls how often the Reaper(which we'll discuss shortly) process runs to remove dead or idle connections. The default is 60 seconds.

Active Record Connection Pool Reaper

Database connections can sometimes become "dead" due to issues like database restarts, network problems etc. Active Record provides Reaper to handle this.

The Reaper periodically checks connections in the pool and removes dead connections as well as idle connections that have been checked out for a long time.

It acts somewhat like a garbage collector for database connections. The Reaper

uses the idle_timeout setting to determine how long a connection can remain

idle before being removed, tracking idle time based on when connections were

last used.

There is another configuration option called reaping_frequency that controls

how often the Reaper runs to remove dead or idle connections from the pool. By

default, this is set to 60 seconds. It means the Reaper will wake up once every

minute to perform its maintenance tasks.

If your application is spiky and receives a lot of traffic in surges, then set the reaping frequency and idle timeout to a lower value. This will ensure that the reaper runs more frequently and removes idle connections more quickly, helping to keep the connection pool healthy and responsive.

Why are idle connections bad?

Idle database connections can significantly impact database performance for several interconnected reasons:

Memory Consumption: Each database connection, even when idle, maintains its own memory allocation. The database must reserve memory for session state, buffers, user context, and transaction workspace. This memory remains allocated even when the connection isn't doing any work. For example, if each connection uses 10 MB of memory, 100 idle connections would unnecessarily consume 1 GB of your database's memory that could otherwise be used for active queries, caching, or other productive work.

CPU overhead: While "idle" suggests no activity, the database still performs regular maintenance work for each connection. It must monitor connection health via keepalive checks, manage process tables etc.

The crucial issue is that the overhead of having idle connections scales non-linearly. As we add more idle connections, the database spends an increasing proportion of its CPU time just managing these connections rather than processing actual queries. Thankfully, the reaper handles this for us.

How many database connections will the web and background processes utilize at maximum?

As we learned, the connection pool is managed at the process level. Each Rails process maintains its own pool.

- In web processes (Puma):

Each Puma process is a separate process with its own connection pool. In a

process, each thread can check out one connection. Therefore, maximum

connections needed per process equals max_threads setting in Puma.

- In background processes (Sidekiq):

Sidekiq runs as a separate process with its own connection pool. The Sidekiq

concurrency setting determines the number of threads and therefore the maximum

connections needed equals the concurrency value.

Note: If you're using Sidekiq swarm and running multiple Sidekiq processes, then take that it into account.

We can calculate the total potential connections for a typical application as shown below.

Web connections = Number of web dynos * Number of Puma processes

* `max_threads` value

Background connections = Number of worker dynos * Number of Sidekiq processes

* Threads per process

Total number of connections = Web connections + Background connections

The key thing to note here is that the database needs to support at least these many simultaneous connections.

Note that if preboot is enabled, then the maximum number of connections will be double the above value. This is because during the release phase, there is a small window in which both the old dynos and new dynos are running.

In Rails 7,

load_async

was introduced which allows us to run database queries asynchronously in a

background thread. When load_async is in used, the calculation for maximum

number of connections needed changes a bit. First, let's understand how

load_async works.

How load_async works

load_async allows Rails to execute database queries asynchronously in

background threads. Unlike regular ActiveRecord queries which are lazily loaded,

load_async queries are always executed immediately in background threads and

joined to the main thread when results are needed.

The async executor is configured through the

config.active_record.async_query_executor setting. There are three possible

configurations:

nil(default): Async queries are disabled, andload_asyncwill execute queries synchronously.:global_thread_pool: Uses a single thread pool for all database connections.:multi_thread_pool: Uses separate thread pools for each database connection.

Rails provides a configuration option named

global_executor_concurrency

(default: 4) that controls how many concurrent async queries can run per

process. So, the maximum number of connections per process when load_async is

used.

Maximum connections per process = Process level concurrency

+ global_executor_concurrency + 1

Here Process level concurrency means max_threads for the Puma process and

sidekiq_conurrency for the Sidekiq process.

The "+1" accounts for the main control thread, which may occasionally need a connection (e.g., during model introspection at class load time).

There is a nice calculator created by the folks at Judoscale which can be used to calculate the maximum number of connections needed for your application.

Setting Database Pool Size Configuration

Our database.yml file has the following line.

pool: <%%= ENV.fetch("RAILS_MAX_THREADS") { 5 } %>

We know that a thread doesn't take more than one DB connection. So the maximum number of connections needed per pool is equal to the total number of threads. So the above configuration looks fine.

However this doesn't take into account whether we use load_async or not. If we

use load_async, then the number of connections needed per process will be

RAILS_MAX_THREADS + global_executor_concurrency + 1.

Do we really need to go into this much detail to determine the pool size? Turns out there is a much easier answer.

Almost all database hosting providers mention the maximum number of connections allowed in their plan. We can just set the pool config to the maximum number of connections supported by our database plan. Let us say we have a Standard-0 database on Heroku. It supports up to 120 connections. So we can set the pool config to 120.

pool: 120

We can do this because the database connections are lazily initialized in the pool. The application doesn't create more database connections than it needs. So we needn't be conservative here.

The only thing we need to ensure is that the maximum connection utilization doesn't exceed the database plan limit. If that happens, then we have another solution - PgBouncer.

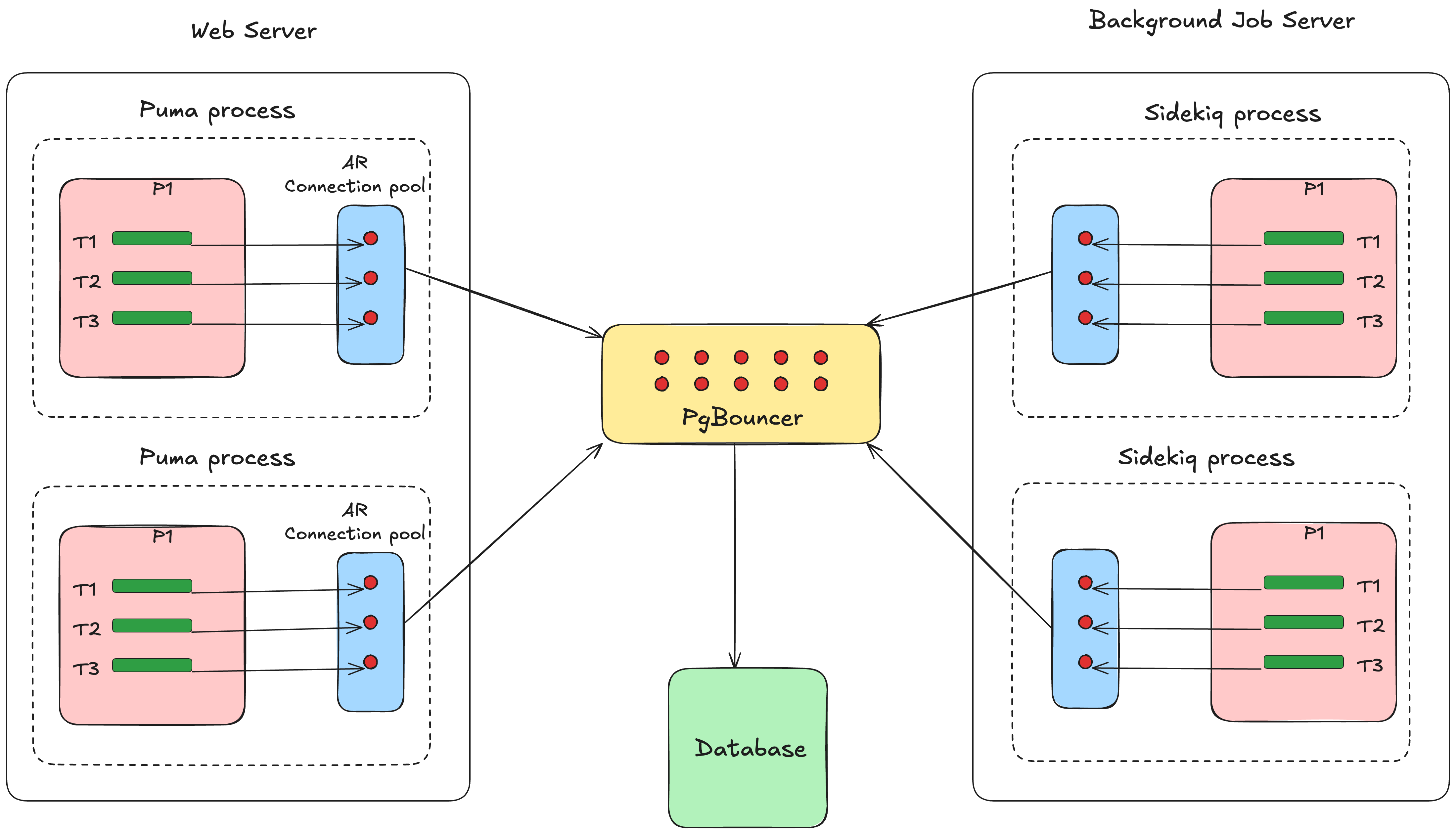

PgBouncer

PgBouncer is a lightweight connection pooler for PostgreSQL. It sits between our application(s) and our PostgreSQL database and manages a pool of database connections.

While both PgBouncer and Active Record provide connection pooling, they operate at different levels and serve different purposes.

Active Record connection pool operates within a single Ruby process and manages

connections for threads within the process, whereas PgBouncer is an external

connection pooler that sits between the application and the database and manages

connections across all the application processes.

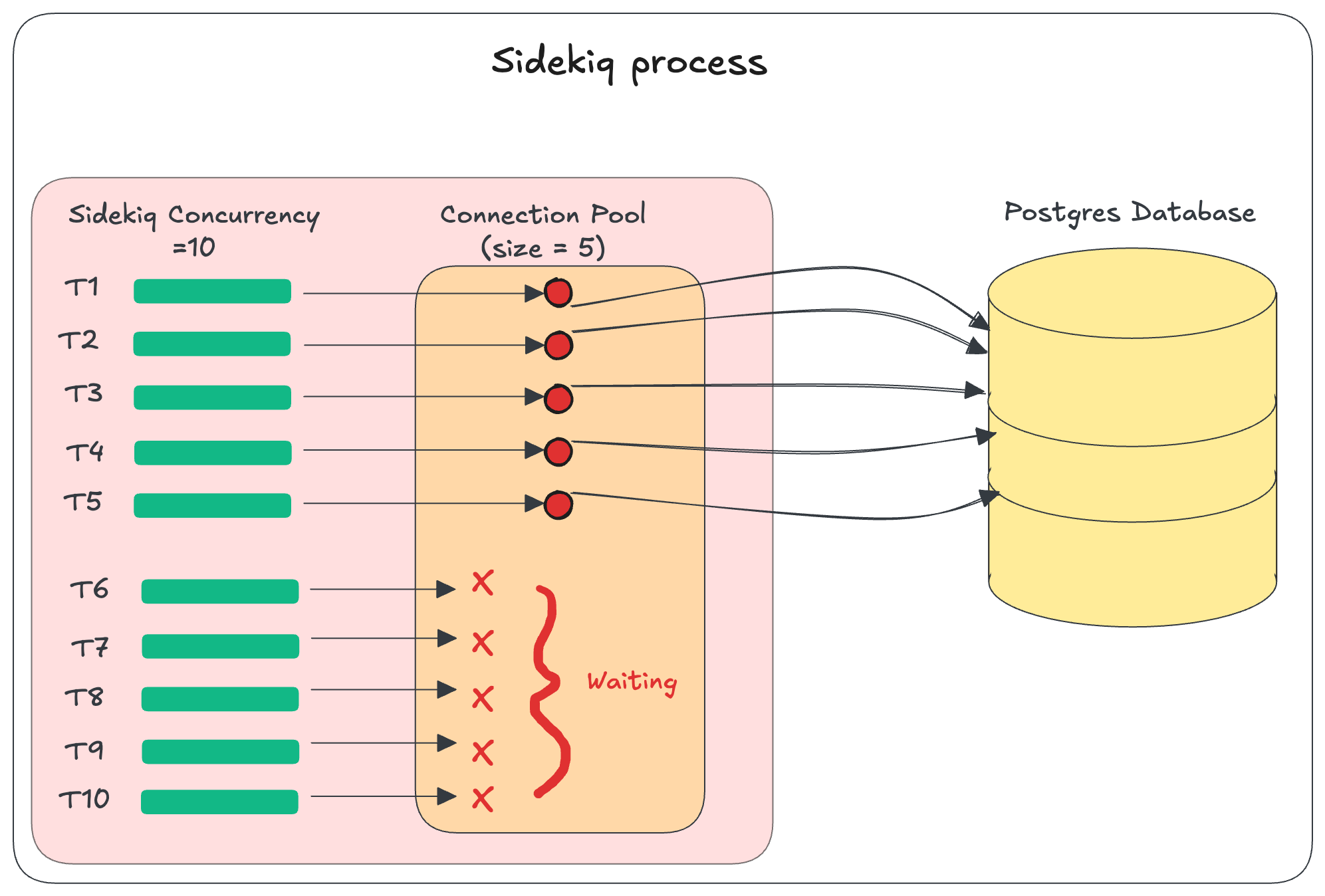

The dreaded ActiveRecord::ConnectionTimeoutError

This error comes up when a thread waits more than checkout_timeout seconds to

acquire a connection. This usually happens when the pool size is set to a

value less than the concurrency.

For example, lets say we have set the Sidekiq concurrency to 10 and pool size to 5. If we have more than 5 threads wanting a connection at any point of time, the threads will have to wait.

What's the solution? As we discussed earlier setting the pool to a really high

value should fix the error in most cases.

Even after setting the config correctly, ActiveRecord::ConnectionTimeoutError

can still happen and it could be puzzling. Let is discuss a few scenarios where

this can happen.

Custom code spinning up new threads and taking up connections

class SomeService

def process

threads = []

5.times do |index|

threads << Thread.new do

ActiveRecord::Base.connection.execute("select pg_sleep(5);")

end

end

threads.each(&:join)

end

end

Here 5 threads are spun up. Note that these threads also take up connections from the same pool allotted to the process.

Active Storage proxy mode

Even if our application code is not spinning up new threads, Rails itself can sometimes spin up additional threads. For example Active Storage configured in proxy mode.

Active Storage’s proxy controllers 1, 2 generate responses as streams, which require dedicated threads for processing.

This means that when serving an Active Storage file through one of these proxy controllers, Rails actually utilizes two separate threads - one for the main request and another for the streaming process. Each of these threads requires its own separate database connection from the ActiveRecord connection pool.

Rack timeouts

rack-timeout is commonly used across Rails applications to automatically terminate long-running requests. While it helps prevent server resources from being tied up by slow requests, it can also cause a few issues.

Rack timeout uses Ruby's

Thread#raise API to terminate

requests that exceed the configured timeout. When a timeout occurs, rack-timeout

raises a Rack::Timeout::RequestTimeoutException from another thread. If this

exception is raised while a thread is in the middle of database operations, it

can prevent proper cleanup of database connections.

Tracking down ActiveRecord::ConnectionTimeoutErrors

If we still frequently see ActiveRecord::ConnectionTimeoutError exceptions in

our application, we can get additional context by logging the connection pool

info to our error monitoring service. This can help identify which all threads

were holding onto the connections when the error occurred.

config.before_notify do |notice|

if notice.error_class == "ActiveRecord::ConnectionTimeoutError"

notice.context = { connection_pool_info: detailed_connection_pool_info }

end

end

def detailed_connection_pool_info

connection_info = {}

ActiveRecord::Base.connection_pool.connections.each_with_index do |conn, index|

connection_info["connection_#{index + 1}"] = conn.owner ? conn.owner.inspect : "[UNUSED]"

end

connection_info["current_thread"] = Thread.current.inspect

connection_info

end

<thread_obj>.inspect gives us the name, id and status of the thread. For

example, if one entry in the hash looks like

#<Thread:0x00006a42eca73ba0@puma srv tp 002 /app/.../gems/puma-6.2.2/lib/puma/thread_pool.rb:106 sleep_forever>

then it means that the connection is taken up by a Puma thread.

Monitoring Active Record Connection Pool Stats

If we want to monitor Active Record Connection Pool stats, then periodically we need to send the stats to a service provider which can display the data graphically. For periodically checking the stat, we are using rufus-scheduler gem. For collecting the data and showing the data we are using NewRelic but you can use any APM of your choice. We have configured to send the pool stat every 15 seconds.

Here is the gist which collects and sends data.

This was Part 5 of our blog series on scaling Rails applications. If any part of the blog is not clear to you then please write to us at LinkedIn, Twitter or BigBinary website.

Follow @bigbinary on X. Check out our full blog archive.