Reduce asset delivery time from 30 to 3 seconds with CDN

November 24, 2020

AceInvoice, one of BigBinary's products, was facing high page load times. AceInvoice is a React.js application, and the size of application.js had grown to 689kB compressed. Folks from India would sometimes have to wait up to 30 whole seconds for application.js to load.

AceInvoice is hosted on Heroku. Heroku serves assets from a limited number of servers in select locations. The farther you are from these servers, the higher the latency of asset delivery. This is where a CDN like Cloudfront can help.

How does Cloudfront work?

Simply stated, CDNs are like caches. A CDN caches recently requested items and can then serve them from the cache at great speed.

Let’s take a practical example

- Create a Cloudfront distribution aceinvoice.cloudfront.com which points to app.aceinvoice.com.

- The browser makes a request to aceinvoice.cloudfront.com/images/peter.jpg for the first time.

- Cloudfront checks if it has anything cached against /images/peter.jpg. Since it’s the first time it’s encountering this URL it won’t find anything, so it’s a cache miss.

- Cloudfront forwards this request back to the origin, which in this case is app.aceinvoice.com/images/peter.jpg. The browser gets back the image.

- During this process, Cloudfront caches the resource. Think of it like a key-value pair where /images/peter.jpg is the key and the actual image is the value.

Now let's consider another scenario

- Another browser makes a request to the same resource.

- Cloudfront checks for cached items for that particular path.

- Cloudfront finds the cached resource. It’s a cache hit!

- Cloudfront directly serves the resource back to the browser without hitting the origin server.
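The miss-then-hit flow in the two scenarios above can be sketched as a tiny in-memory cache. This is only a toy model, not how Cloudfront is implemented: the `EdgeCache` class and the `origin` lambda are hypothetical names made up for illustration, and the origin here just returns a placeholder string instead of a real image.

```ruby
# A toy model of a single CDN edge cache. Hypothetical, for illustration only.
class EdgeCache
  attr_reader :hits, :misses

  # origin: any object that responds to #call(path) and returns the resource.
  def initialize(origin)
    @origin = origin
    @store = {} # path => cached resource (the key-value pair from the text)
    @hits = 0
    @misses = 0
  end

  def fetch(path)
    if @store.key?(path)
      @hits += 1
      @store[path] # cache hit: the origin is not contacted
    else
      @misses += 1
      @store[path] = @origin.call(path) # cache miss: ask the origin, then remember the answer
    end
  end
end

# Stand-in for app.aceinvoice.com that records every request it receives.
origin_requests = []
origin = lambda do |path|
  origin_requests << path
  "bytes of #{path}"
end

edge = EdgeCache.new(origin)
first = edge.fetch("/images/peter.jpg")  # first browser: miss, forwarded to the origin
second = edge.fetch("/images/peter.jpg") # second browser: hit, served from the cache
```

After both calls the origin has been contacted only once, which is the whole point: the second browser never waits on the origin server.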

So how is this faster?

Cloudfront has 100+ edge locations scattered around the world, so there is usually an edge close to you. Requests are routed to a nearby edge, and once that edge has cached a resource it can serve it without a round trip to the distant origin. This reduces latency.

What are the caveats?

The biggest issue with using a CDN is properly invalidating caches. Let’s continue with the above example. If the peter.jpg file is updated, Cloudfront is unaware of this change. It’ll keep serving the old file whenever a request is made to that path.

The easiest way to invalidate the cache is by using a hash in the asset name that changes whenever the asset's content changes. Rails handles this by default. After deploying the application, the path to the aforementioned asset might be images/peter-5nbd44gfae.jpg. When a request comes to this path, Cloudfront caches it and uses the cache for subsequent requests.

But when the asset is modified and deployed again, its path changes. Since Cloudfront doesn’t have anything cached for the new URL, it will check the origin and get the latest asset.
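To see why a hash in the file name sidesteps stale caches, here is a minimal sketch of content-based fingerprinting. It is not the code Rails uses: `fingerprinted_name` is a hypothetical helper, and the choice of SHA-256 truncated to 10 characters is an assumption made purely for illustration.

```ruby
require "digest"

# Hypothetical helper: derive a fingerprinted file name from the file's content.
def fingerprinted_name(name, content)
  ext = File.extname(name)        # ".jpg"
  base = File.basename(name, ext) # "peter"
  digest = Digest::SHA256.hexdigest(content)[0, 10] # short hash of the content
  "#{base}-#{digest}#{ext}"
end

old_name = fingerprinted_name("peter.jpg", "old image bytes")
new_name = fingerprinted_name("peter.jpg", "new image bytes")

# Different content yields a different name, so the CDN sees a brand new
# cache key and has to fetch the updated file from the origin.
puts old_name
puts new_name
```

Because the name depends only on the content, an unchanged asset keeps its name across deploys and stays cached, while a changed asset automatically gets a fresh URL.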

How to set up Cloudfront with Rails

Rails makes it easy to set up an asset host. In the config/environments/production.rb file, add the following line.

config.action_controller.asset_host = ENV['CLOUDFRONT_ENDPOINT']

By doing this, Rails will generate asset URLs that point to Cloudfront instead of the application server.

Let's consider the application.js asset. In the main .erb file the src for application.js was /packs/js/application.js. Once we make this change it will be https://ENV[CLOUDFRONT_ENDPOINT]/packs/js/application.js.

We will be setting the environment variable in Heroku shortly.
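To make the URL rewrite concrete, here is a rough sketch of the effect an asset host has on an asset path. `asset_url` below is a hypothetical stand-in written for this post, not the Rails helper of the same idea, and it assumes HTTPS whenever the host is given without a protocol.

```ruby
# Hypothetical helper that mimics the effect of setting an asset host.
# It is a simplification, not the Rails implementation.
def asset_url(path, asset_host)
  # Without an asset host, assets are served from the application itself.
  return path if asset_host.nil? || asset_host.empty?

  # Assumption: default to HTTPS when the host has no protocol.
  host = asset_host.start_with?("http://", "https://") ? asset_host : "https://#{asset_host}"
  "#{host.chomp('/')}#{path}"
end

without_cdn = asset_url("/packs/js/application.js", nil)
with_cdn = asset_url("/packs/js/application.js", "aceinvoice.cloudfront.com")

puts without_cdn # /packs/js/application.js
puts with_cdn    # https://aceinvoice.cloudfront.com/packs/js/application.js
```

Falling back to the plain path when the variable is unset mirrors what happens in environments where CLOUDFRONT_ENDPOINT is not configured: the assets are simply served by the app.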

Creating a Cloudfront distribution

-

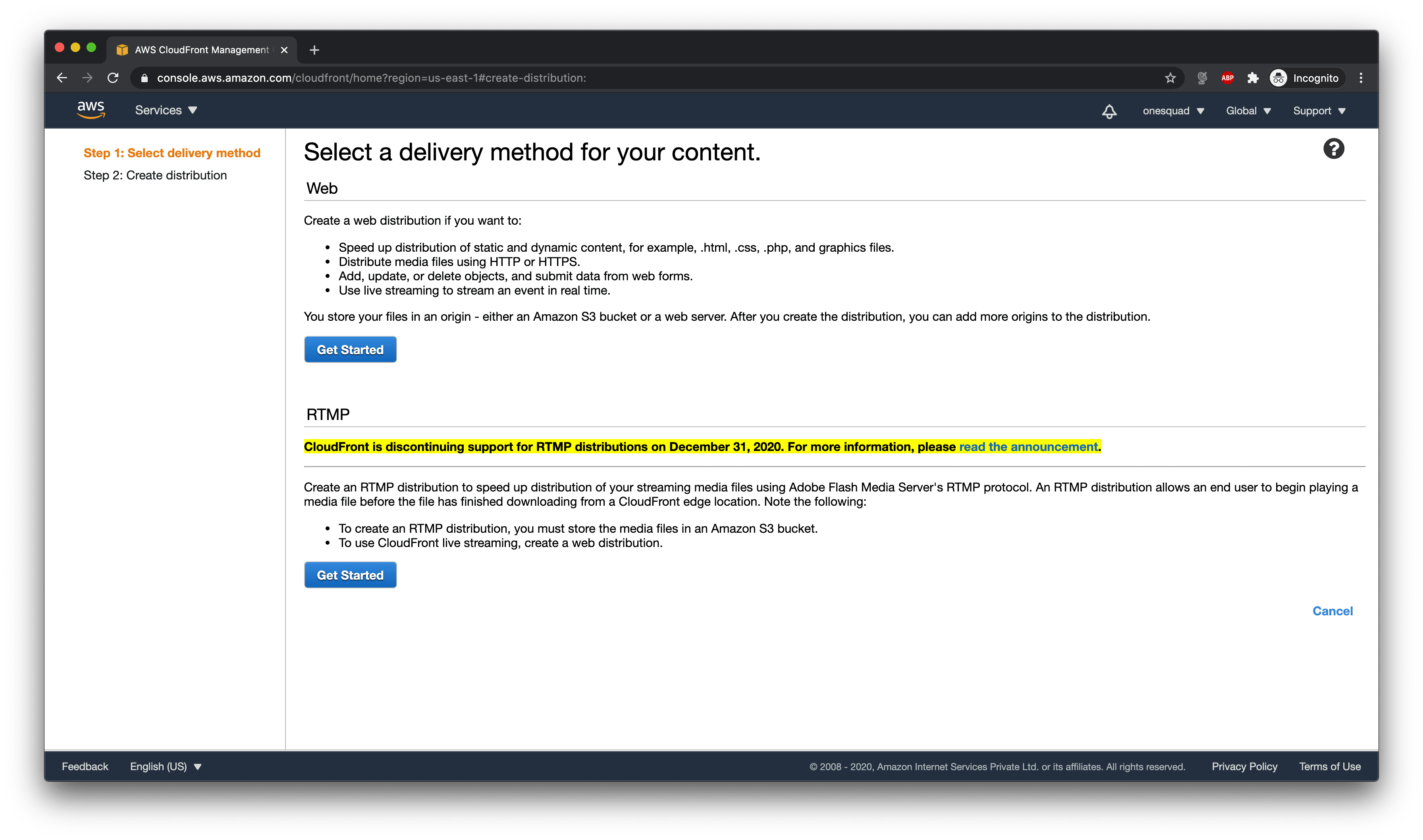

Go to AWS Management Console -> Cloudfront -> Create Distribution Choose Web as the delivery method.

-

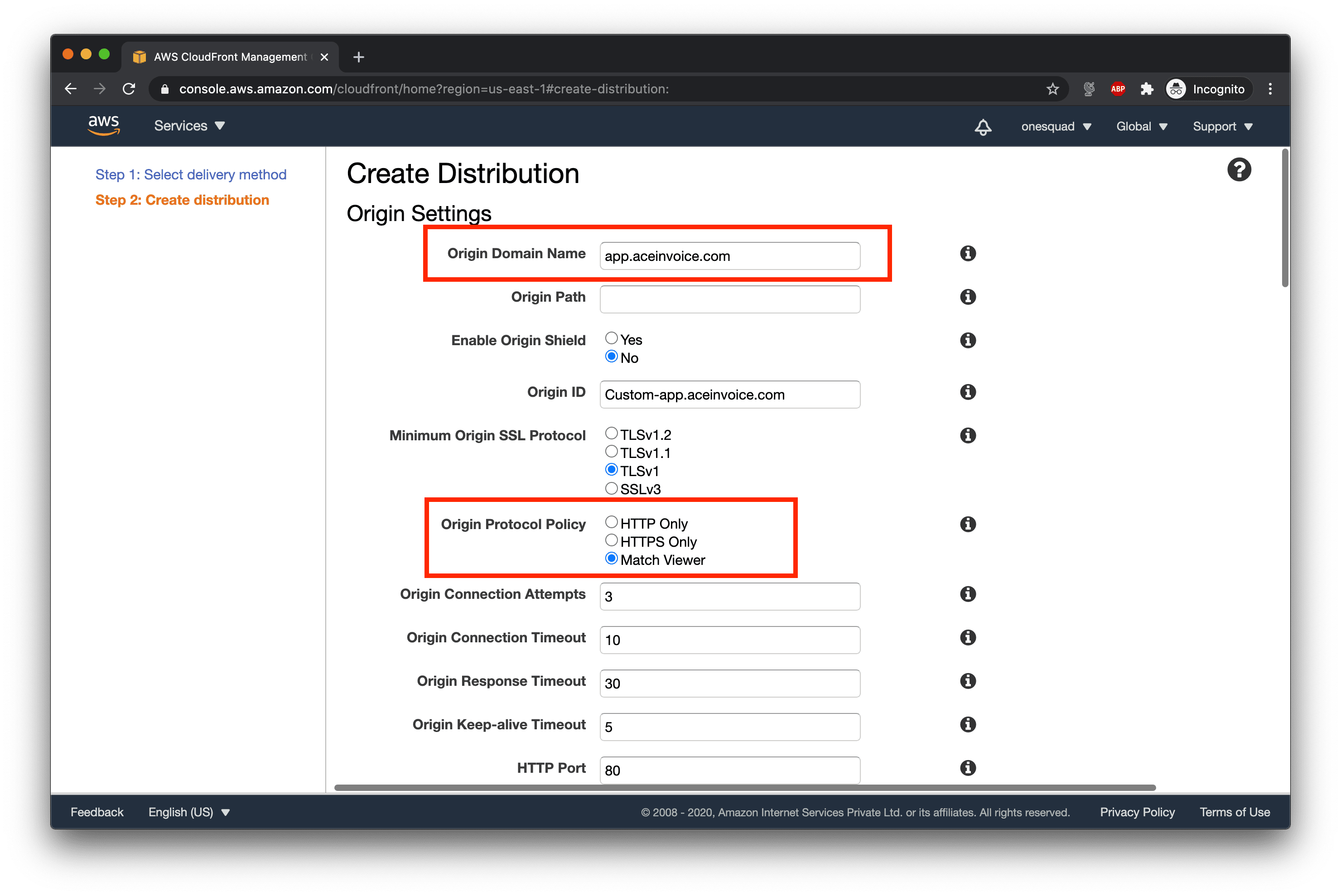

In origin domain name, specify the path to your server. In this example it is

app.aceinvoice.com. In origin protocol policy, choose Match viewer so that the same protocol as the main request is used when Cloudfront forwards requests to the origin server. You can leave the other settings unchanged.

-

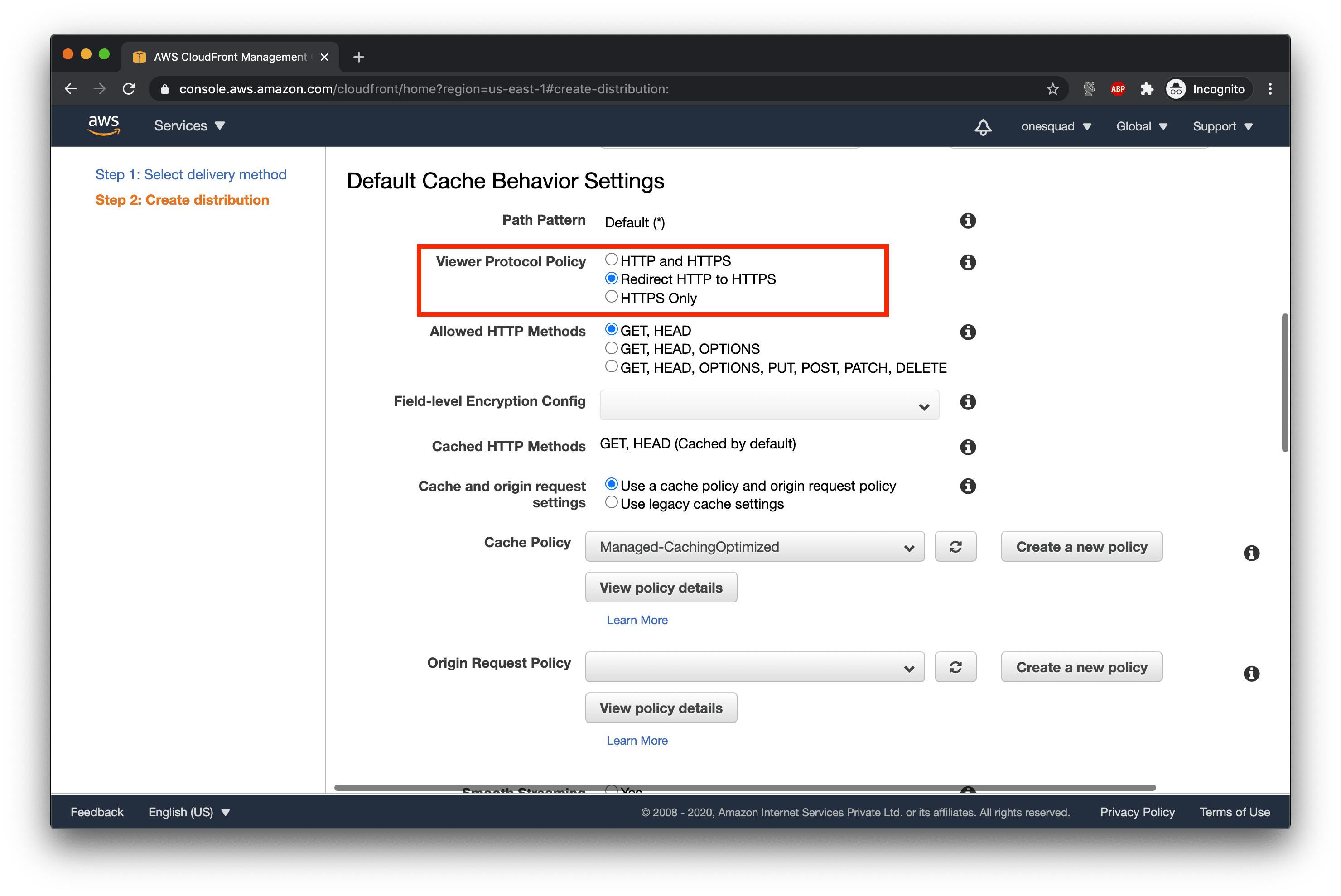

In the cache behaviour settings, change viewer protocol policy to Redirect HTTP to HTTPS. You can leave the other settings untouched.

-

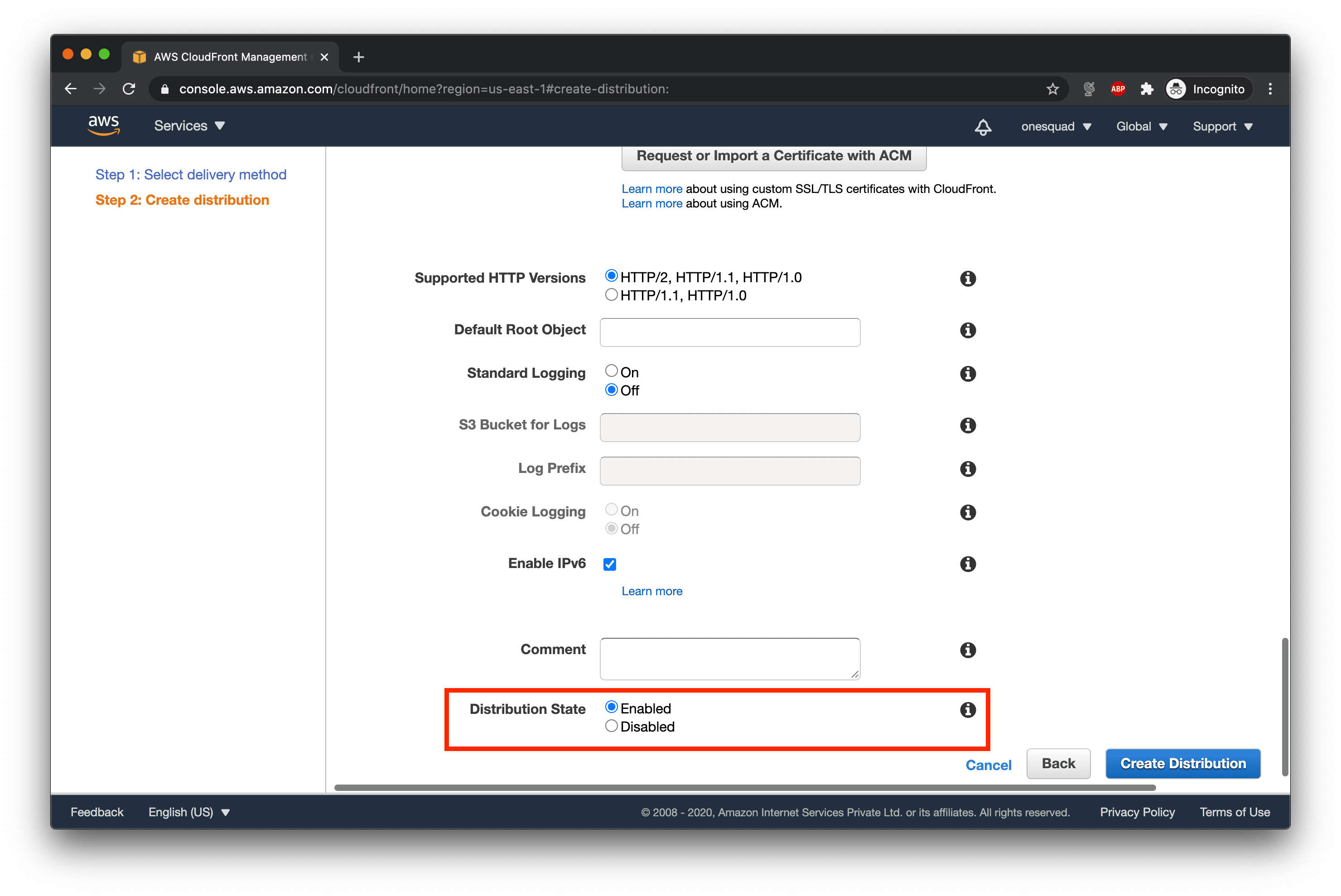

At the bottom of the page switch the distribution state to Enabled and click on Create Distribution.

-

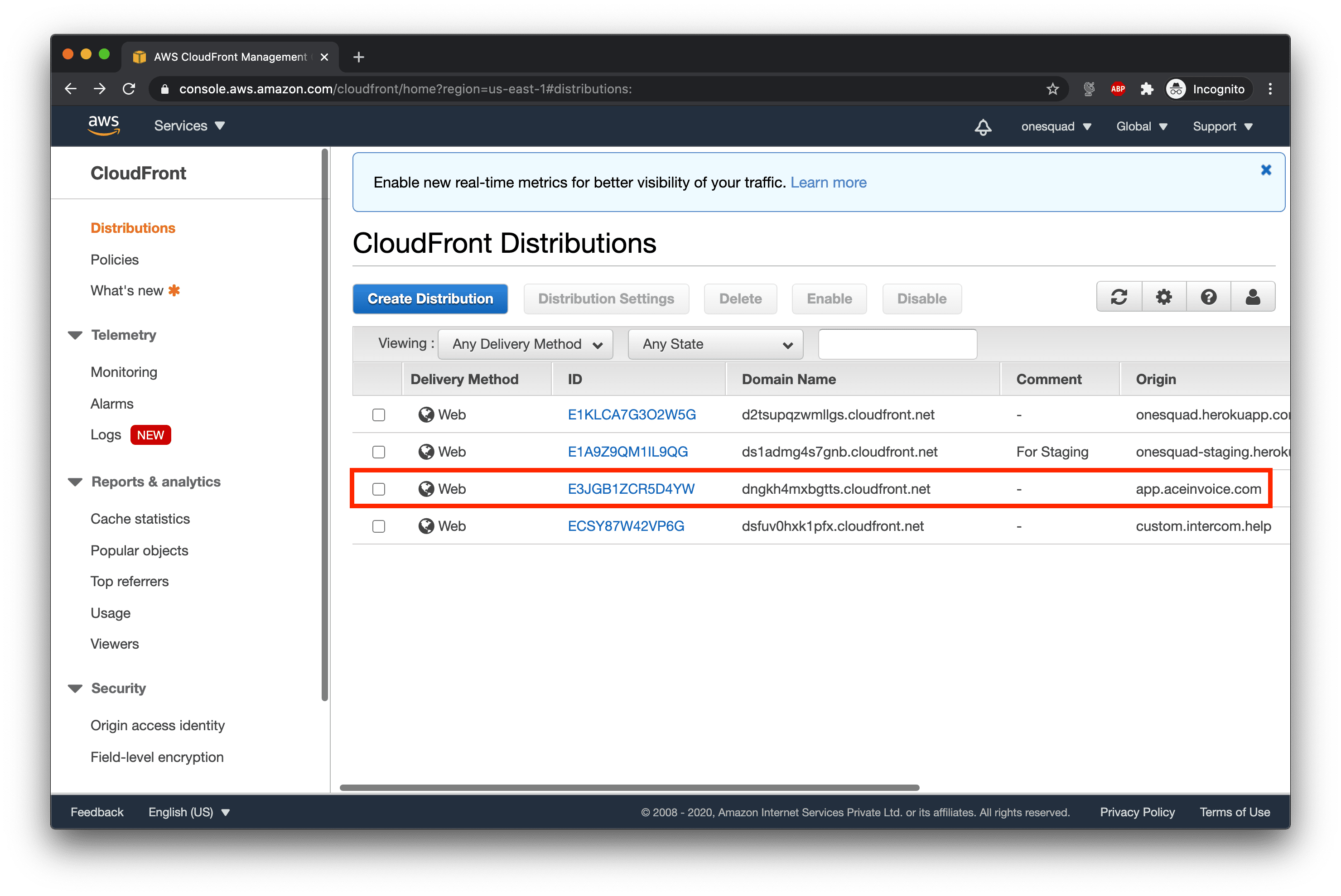

Note the Domain name of your distribution from the Cloudfront dashboard.

-

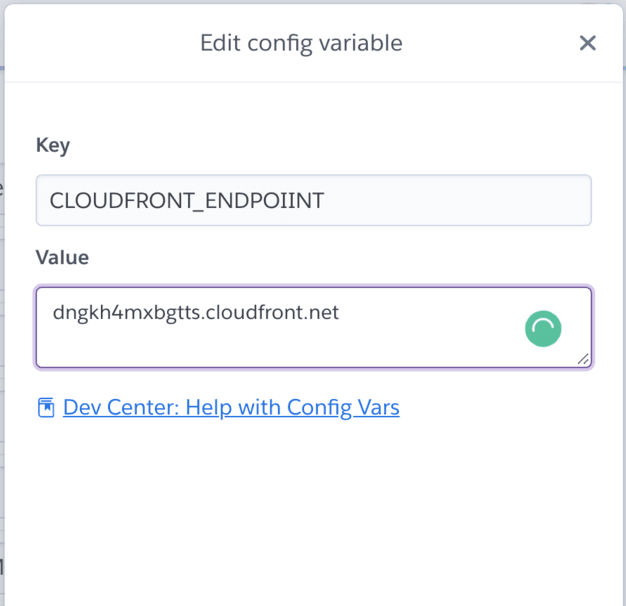

In the Heroku dashboard add the environment variable in your app.

Result

Serving time of application.js on a cold load (not cached in browser) dropped from 30 seconds to 2-3 seconds.
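If you want a rough before-and-after number of your own, a small timing script is enough. The sketch below is an assumption about how one might measure it, not how the figures above were obtained: `time_download` is a hypothetical helper, and the result reflects the network location of the machine running it, so it says little about users elsewhere.

```ruby
require "benchmark"
require "net/http"
require "uri"

# Hypothetical helper: download a URL and return [seconds, bytes].
# The fetcher is injectable so the helper can be tried without network access.
def time_download(url, fetcher: ->(uri) { Net::HTTP.get(uri) })
  uri = URI(url)
  body = nil
  seconds = Benchmark.realtime { body = fetcher.call(uri) }
  [seconds, body.bytesize]
end

# Example usage (requires network access):
#   seconds, bytes = time_download("https://app.aceinvoice.com/packs/js/application.js")
#   puts "#{bytes} bytes in #{seconds.round(2)}s"
```

Run it once against the origin URL and once against the Cloudfront URL, and repeat the Cloudfront request a second time so that the comparison includes a cache hit.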
