GVL in Ruby and the impact of GVL in scaling Rails applications

April 24, 2025

This is Part 2 of our blog series on scaling Rails applications.

Let's start with the basics and look at how a typical web application behaves.

Web applications and CPU usage

Code in a web application typically works like this.

- Do some data manipulation.

- Make a few database calls.

- Do more calculations.

- Make some network calls.

- Do more calculations.

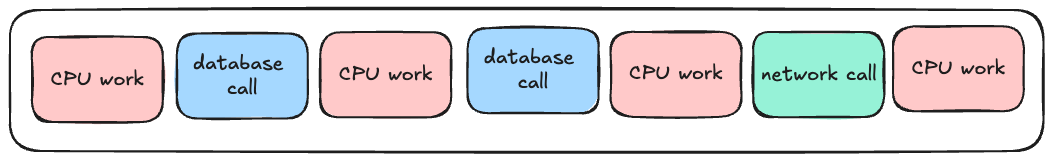

Visually, it'll look something like this.

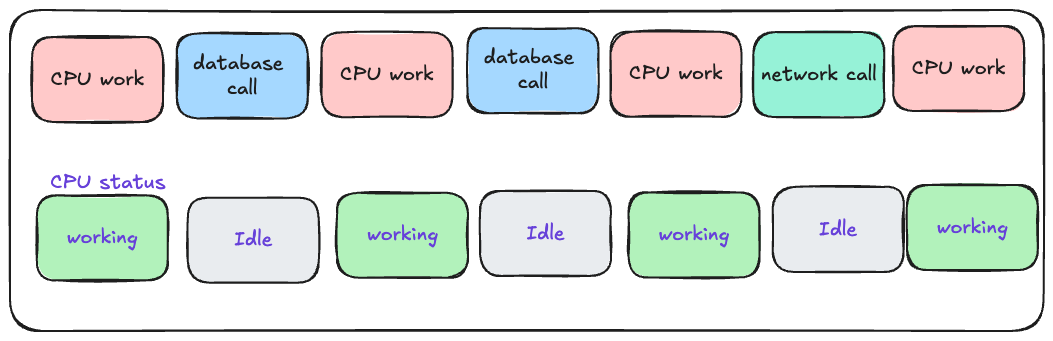

CPU work includes operations like view rendering, string manipulation and any kind of business logic processing. In short, anything that involves Ruby code execution can be considered CPU work. For the rest of the work, like database calls and network calls, the CPU is idle. Another way of looking at when the CPU is working and when it's idle is this picture.

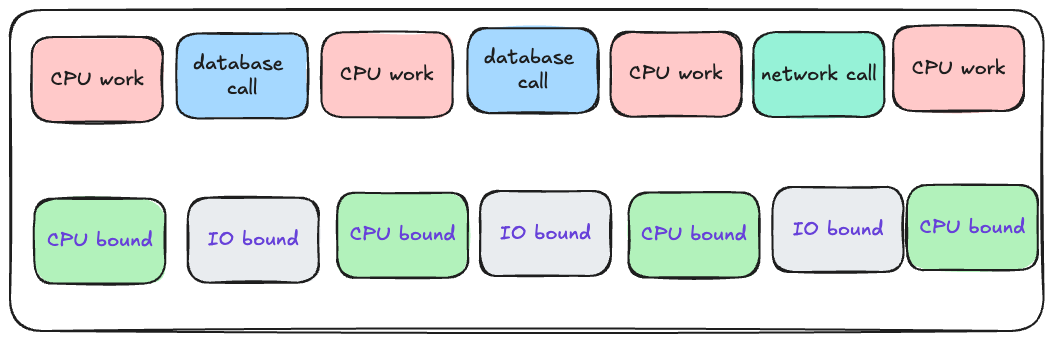

When a program is using the CPU, that portion of the code is called CPU bound. When the program is not using the CPU, that portion of the code is called I/O bound.

CPU bound or IO bound

Let us understand what CPU bound truly means. Consider the following piece of code.

```ruby
require "net/http"

# Fetch the same page 10 times, one request after another.
10.times do
  Net::HTTP.get(URI.parse("https://bigbinary.com"))
end
```

In the above code, we are hitting the BigBinary website 10 times sequentially. Running the above code takes time because making a network connection is a time-consuming process.

Let's assume the code above takes 10 seconds to finish. We want the code to run faster, so we buy a faster CPU for the server. Do you think the code will now run faster?

It will not. That's because the above code is not CPU bound. CPU is not the limiting factor in this case. This code is I/O bound.

A program is CPU bound if it would run faster on a faster CPU.

A program is I/O bound if it would run faster if its I/O operations were faster.

Some examples of I/O bound operations are:

- making database calls: reading data from tables, creating new tables etc.

- making network calls: reading data from a website, sending emails etc.

- dealing with file systems: reading files from the file system.
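One rough way to tell which category a piece of code falls into is to compare its wall-clock time with the CPU time the process actually consumed. The sketch below does that with Process.clock_gettime. It makes two assumptions: sleep stands in for a network or database call, and it runs on a platform that supports Process::CLOCK_PROCESS_CPUTIME_ID, as Linux and macOS do. The measure helper is hypothetical.

```ruby
# Compare wall-clock time with CPU time for a block of code.
# CLOCK_PROCESS_CPUTIME_ID only advances while this process is running on a CPU.
def measure
  wall_start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  cpu_start = Process.clock_gettime(Process::CLOCK_PROCESS_CPUTIME_ID)
  yield
  {
    wall: Process.clock_gettime(Process::CLOCK_MONOTONIC) - wall_start,
    cpu: Process.clock_gettime(Process::CLOCK_PROCESS_CPUTIME_ID) - cpu_start
  }
end

io_bound = measure { sleep 0.5 }                          # stand-in for a network or database call
cpu_bound = measure { 3_000_000.times.sum { |i| i % 7 } } # pure Ruby computation

puts format("I/O bound: wall %.2fs, cpu %.2fs", io_bound[:wall], io_bound[:cpu])
puts format("CPU bound: wall %.2fs, cpu %.2fs", cpu_bound[:wall], cpu_bound[:cpu])
```

For the I/O bound block, the wall time should be about 0.5 seconds while the CPU time stays near zero. For the CPU bound block, the two numbers should be close to each other.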

Previously, we saw that our CPU was sometimes idle. Now that we know the technical term for that idleness is I/O bound, let's update the picture.

When a program is I/O bound, the CPU is not doing anything. We don't want precious CPU cycles to be wasted. So what can we do so that the CPU is fully utilized?

So far we have been dealing with only one thread. We can increase the number of threads in the process. That way, whenever one thread finishes its CPU bound code and starts an I/O bound operation, the CPU can switch to handling work from another thread. This ensures that the CPU is utilized efficiently. We will look at how the switching between threads works a bit later in the article.
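Here is a minimal sketch of that idea. It makes ten I/O bound calls, first sequentially and then with one thread per call. The sketch assumes sleep is a reasonable stand-in for a network request, since sleep, like a real network wait, releases the GVL. The fake_request and elapsed helpers are hypothetical.

```ruby
# Hypothetical stand-in for one network call; sleep releases the GVL while waiting.
def fake_request
  sleep 0.2
end

def elapsed
  start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  yield
  Process.clock_gettime(Process::CLOCK_MONOTONIC) - start
end

sequential = elapsed { 10.times { fake_request } }
threaded = elapsed { 10.times.map { Thread.new { fake_request } }.each(&:join) }

puts format("sequential: %.2fs, threaded: %.2fs", sequential, threaded)
```

The sequential version needs at least 2 seconds. The threaded version overlaps the waits, so it should finish in roughly the time of a single call.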

Concurrency vs Parallelism

Concurrency and parallelism sound similar, and in daily life you can use one in place of the other and be fine. However, from a computer engineering point of view, there is a difference between work happening concurrently and work happening in parallel.

Imagine a person who has to respond to 100 emails and 100 Twitter messages. The person can reply to an email and then reply to a Twitter message, then do the same all over again: reply to an email and reply to a Twitter message.

The boss will see the count of pending emails and Twitter messages go down from 100 to 99 to 98. The boss might think that the work is happening in "parallel." But that's not true.

Technically, the work is happening concurrently. For a system to be parallel, it should have two or more actions executed simultaneously. In this case, at any given moment, the person was either responding to email or responding to Twitter.

Another way to look at it: concurrency is about dealing with lots of things at the same time, while parallelism is about doing lots of things at the same time.

If you find it hard to remember which is which, note that concurrency starts with "con". Concurrency is the conman. It pretends to be doing things "in parallel," but it's only doing things concurrently.
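The email and Twitter example can be modeled as a toy with a single worker, the main thread, alternating between two queues of tasks. Both queues make progress, but only one task is ever being handled at a given moment, so this is concurrency without parallelism. The task names are hypothetical.

```ruby
# One worker interleaving two queues of tasks (concurrency, not parallelism).
emails = %w[email-1 email-2 email-3].each # external enumerators
tweets = %w[tweet-1 tweet-2 tweet-3].each

log = []
loop do # `loop` stops when `next` raises StopIteration
  log << "replied to #{emails.next}"
  log << "replied to #{tweets.next}"
end

puts log
```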

Understanding GVL in Ruby

GVL (Global VM Lock) in Ruby is a mechanism that prevents multiple threads from executing Ruby code simultaneously. The GVL acts like a traffic light on a one-lane bridge. Even if multiple cars (threads) want to cross the bridge at the same time, the traffic light (GVL) allows only one car to pass at a time. Only when one car has safely reached the other end does the traffic light (GVL) allow the next car to start.

Ruby's memory management (like garbage collection) and some other parts of Ruby are not thread-safe. Hence, the GVL ensures that only one thread runs Ruby code at a time to avoid data corruption.

When a thread "holds the GVL", it has exclusive access to modify the VM structures.

It's important to note that GVL is there to protect how Ruby works and manages Ruby's internal VM state. GVL is not there to protect our application code. It's worth repeating. The presence of GVL doesn't mean that we can write our code in a thread unsafe manner and expect Ruby to take care of all threading issues in our code.

Ruby offers tools like Mutex, and gems like concurrent-ruby, to manage concurrent code. For example, the following code (source) is not thread safe, and the GVL will not protect it from race conditions.

```ruby
from = 100_000_000
to = 0

50.times.map do
  Thread.new do
    while from > 0
      from -= 1
      to += 1
    end
  end
end.map(&:join)

puts "to = #{to}"
```

When we run this code, we might expect the result to always equal 100,000,000, since we're just moving counts from the from variable to the to variable. However, if we run it multiple times, we'll get different results.

This happens because multiple threads are trying to modify the same variables (from and to) simultaneously without any synchronization. This is called a race condition, and it happens because the operations to += 1 and from -= 1 are not atomic. In simpler terms, the operation to += 1 is really three separate steps:

- Read the current value of to.

- Add 1 to it.

- Store the result back to to.
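To see why splitting the increment into steps matters, the sketch below replays one unlucky interleaving of two threads by hand, with no real threads involved. Both threads read the value before either one writes it back, so one of the two increments is lost. The variable names a and b are hypothetical stand-ins for each thread's private copy of the value.

```ruby
# Replay, step by step, one unlucky interleaving of two threads running `to += 1`.
to = 0

a = to  # Thread A: read current value (0)
b = to  # Thread B: read current value (0) before A has stored its result
a += 1  # Thread A: add 1
b += 1  # Thread B: add 1
to = a  # Thread A: store 1
to = b  # Thread B: store 1, overwriting A's update

puts "to = #{to}" # prints "to = 1": two increments ran, but one was lost
```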

To fix this race condition, we can rewrite the above code using a Mutex.

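```ruby
from = 100_000_000
to = 0
lock = Mutex.new

50.times.map do
  Thread.new do
    while from > 0
      lock.synchronize do
        # Re-check inside the lock: another thread may have drained `from`
        # between the `while` check and acquiring the lock.
        if from > 0
          from -= 1
          to += 1
        end
      end
    end
  end
end.map(&:join)

puts "to = #{to}"
```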

It's worth noting that Ruby implementations like JRuby and TruffleRuby don't have a GVL.

GVL dictates how many processes we will need

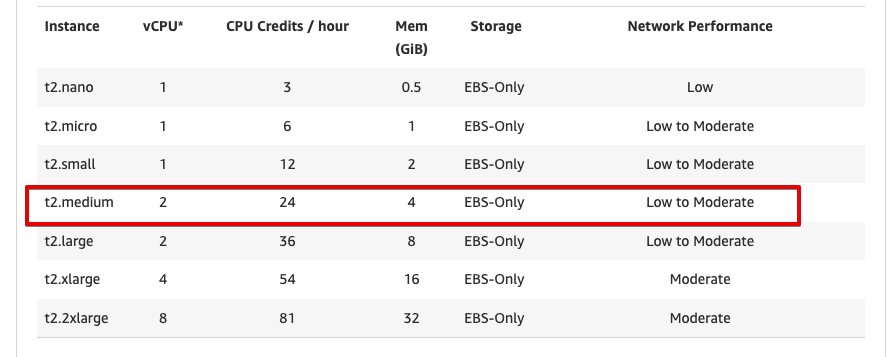

Let's say that we deploy the production app to an AWS EC2 t2.medium machine.

This machine has 2 vCPUs, as we can see from this chart.

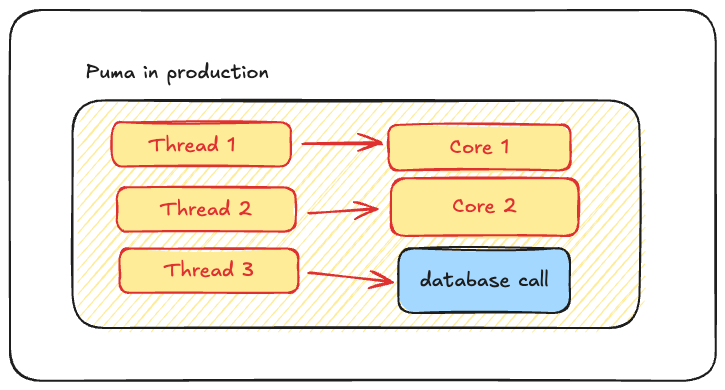

Without going into the CPU vs vCPU discussion, let's keep things simple and assume that the AWS machine has two cores. So we have deployed our code on a machine with two cores, but we have only one process running in production. No worries: we have three threads, and three threads can share two cores. You would think that something like this should be possible.

But it's not possible. Ruby doesn't allow it.

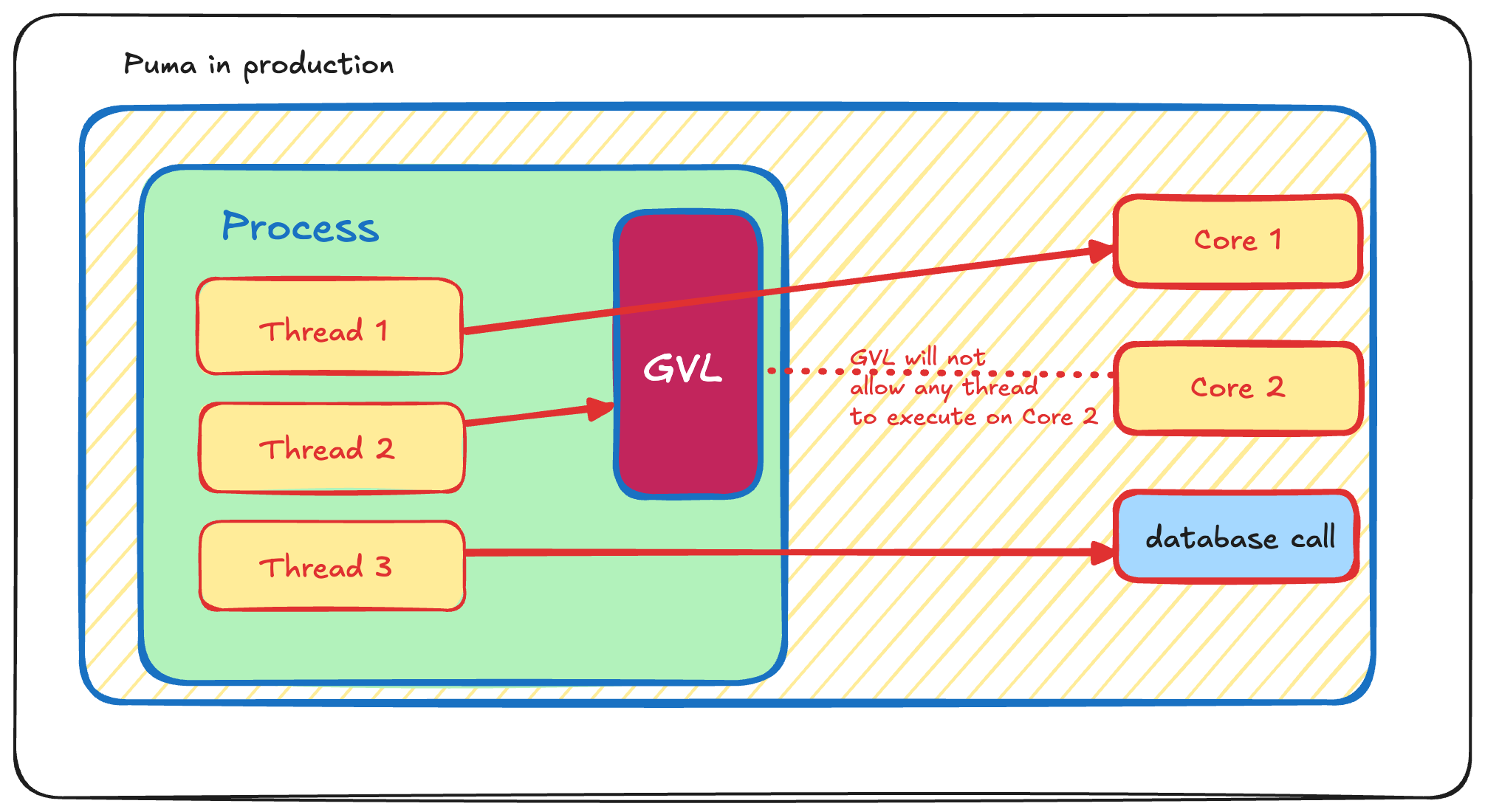

It's not possible because Thread 1 and Thread 2 belong to the same process, and that process has a single Global VM Lock (GVL).

The GVL ensures that only one thread can execute CPU bound code at a time within a single Ruby process. The important thing to note here is that this lock applies only to CPU bound code and only within the same process.

In the above case, all three threads can do DB operations in parallel. But two threads of the same process can't be doing CPU operations in parallel.

We can see that "Thread 1" is using Core 1. Core 2 is available but "Thread 2" can't use Core 2. GVL won't allow it.

Again, let's revisit what the GVL does. For CPU bound code, the GVL ensures that only one thread from a process can use the CPU at a time.

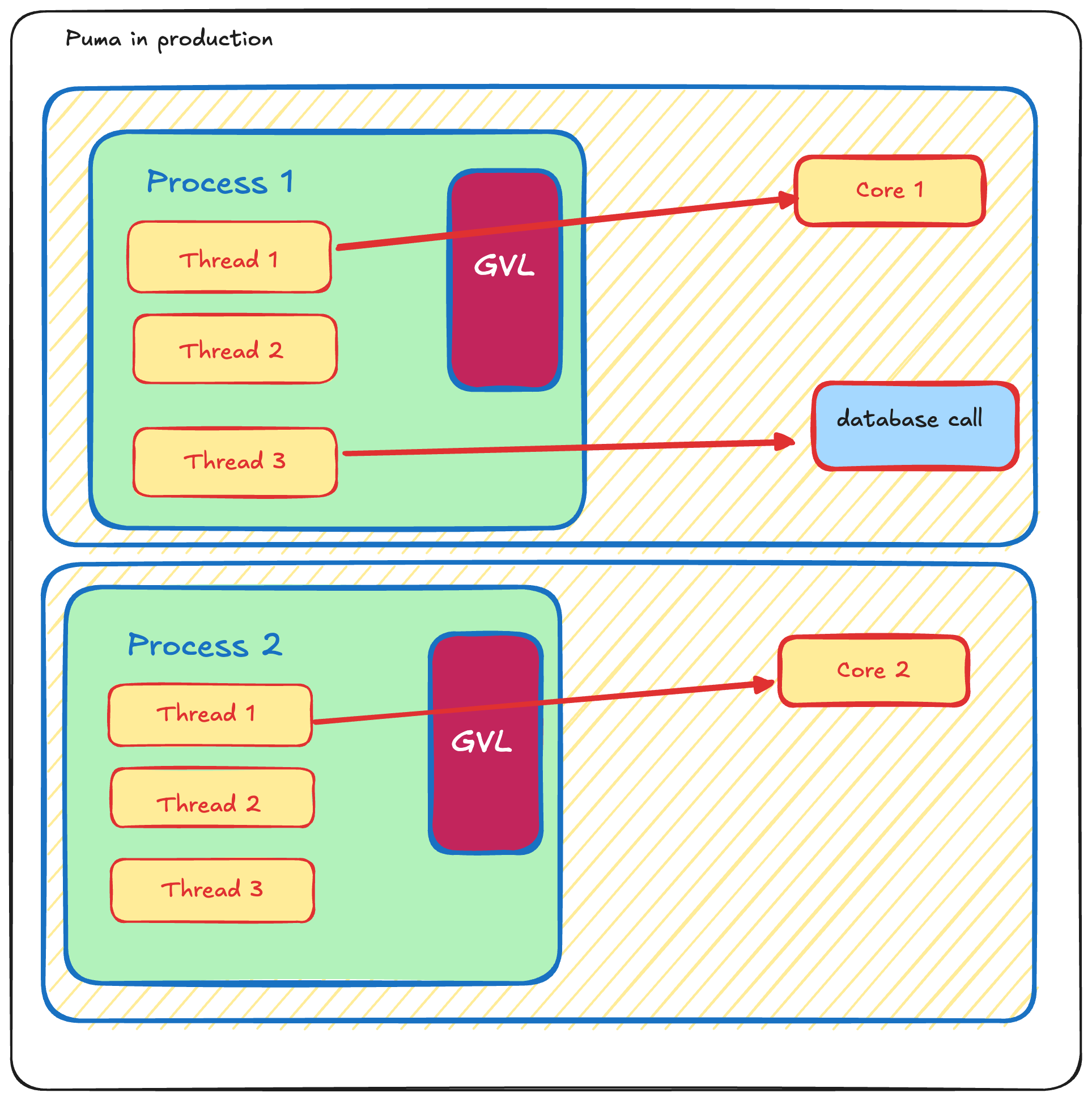

So how do we utilize Core 2? The GVL is applied at the process level: threads of the same process are not allowed to do CPU operations in parallel. Hence, the solution is to have more processes.

To run two Puma processes, we need to set the environment variable WEB_CONCURRENCY to 2 and restart Puma.

```shell
WEB_CONCURRENCY=2 bundle exec rails s
```

Now we have two processes, and both Core 1 and Core 2 are being utilized.
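Setting WEB_CONCURRENCY only has an effect if Puma's configuration reads it. A Rails app's config/puma.rb might contain something along the lines of the sketch below. The file generated for your Rails and Puma versions may differ. workers, threads and preload_app! are Puma configuration methods, and RAILS_MAX_THREADS is the variable name Rails-generated configs conventionally use. The default values here are illustrative assumptions.

```ruby
# config/puma.rb (sketch; your app's generated file may differ)

# Number of Puma worker processes. Each process has its own GVL.
workers Integer(ENV.fetch("WEB_CONCURRENCY", 2))

# Threads per worker process (min, max).
max_threads = Integer(ENV.fetch("RAILS_MAX_THREADS", 3))
threads max_threads, max_threads

# Load the app once before forking so workers can share memory via copy-on-write.
preload_app!
```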

What if the machine has 5 cores? Do we need 5 processes?

Yes. In that case, we will need to have 5 processes to utilize all the cores.

Therefore, for maximum utilization, the rule of thumb is to set the number of processes, i.e. WEB_CONCURRENCY, to the number of cores available on the machine.
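As a starting point for that rule of thumb, Ruby's standard library can report how many processors the OS exposes. This is a sketch with one caveat to keep in mind: Etc.nprocessors counts logical processors (vCPUs), and inside a container it may not reflect CPU quota limits. The fallback logic is an assumption for illustration.

```ruby
require "etc"

# Number of logical processors (vCPUs) the OS reports for this process.
cores = Etc.nprocessors

# Rule of thumb from above: one Puma process per core, unless WEB_CONCURRENCY is already set.
web_concurrency = Integer(ENV.fetch("WEB_CONCURRENCY", cores))

puts "cores: #{cores}, suggested WEB_CONCURRENCY: #{web_concurrency}"
```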

Thread switching

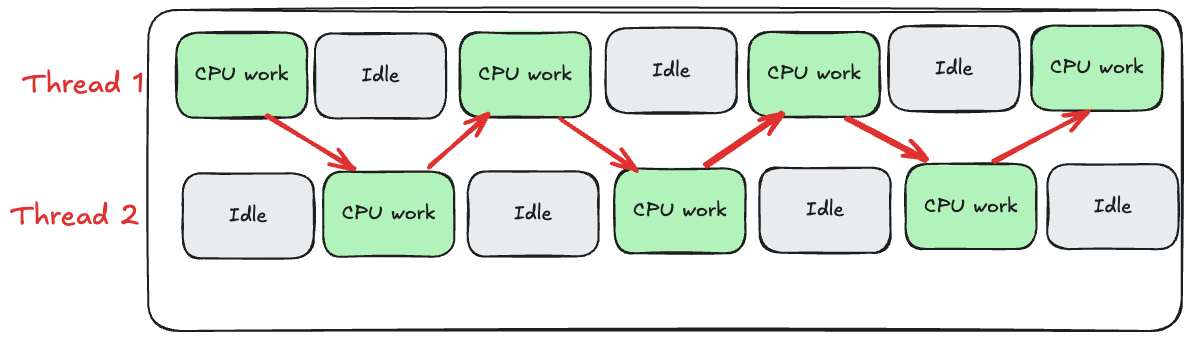

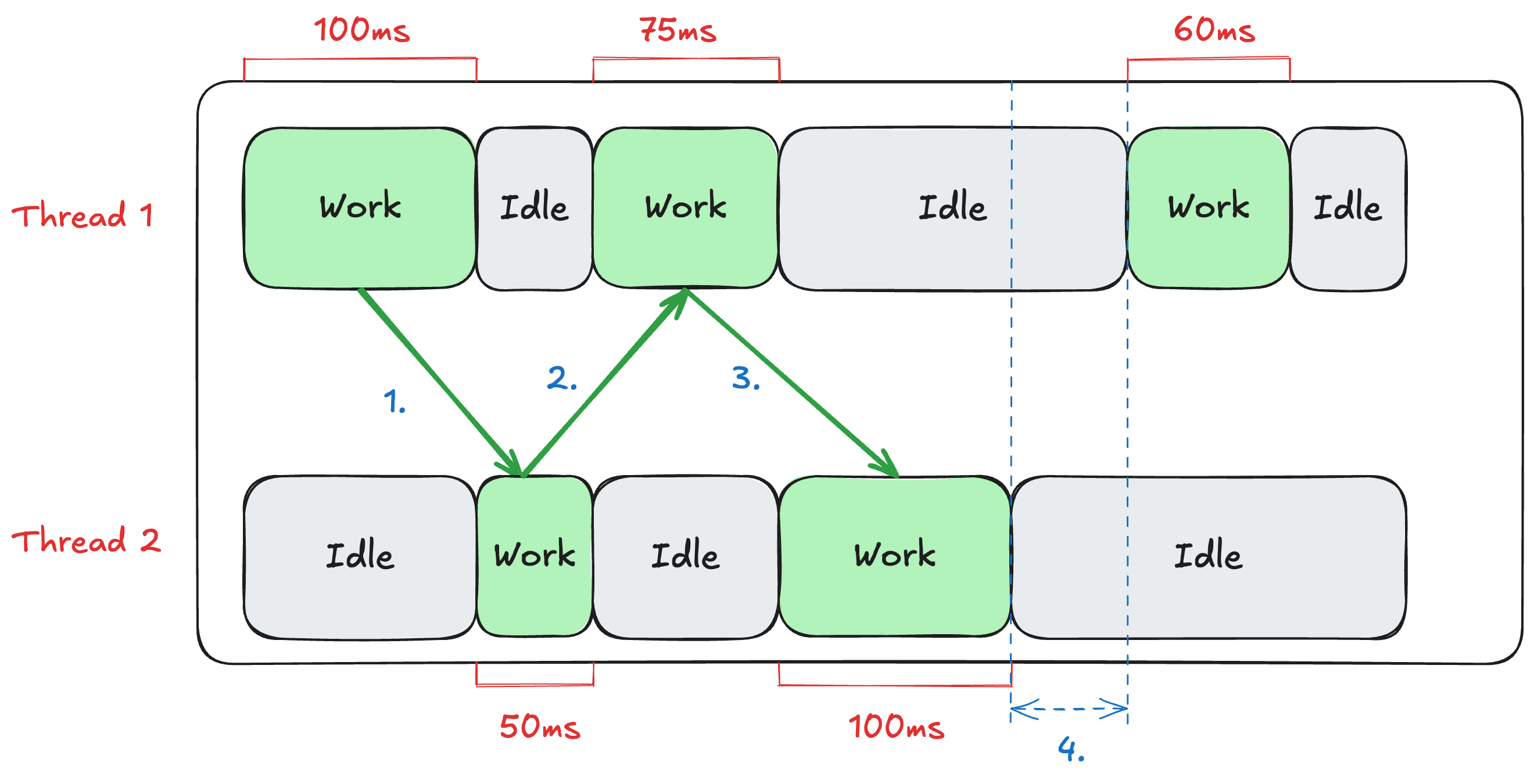

Now let's see how switching between threads happens in a multi-threaded environment. Note that the number of threads is 2 in this case.

As we can see, the CPU switches between Thread 1 and Thread 2 whenever it would otherwise be idle. This is great: we no longer waste the CPU cycles that went unused in the single-threaded case. But the switching logic is much more nuanced than what is shown in the picture.

Ruby manages multiple threads at two levels: the operating system level and the Ruby level. When we create threads in Ruby, they are "native threads" - meaning they are real threads that the operating system (OS) can see and manage.

All operating systems have a component called the scheduler. In Linux, this was long the Completely Fair Scheduler (CFS), which was replaced by EEVDF in kernel 6.6. The scheduler decides which thread gets to use the CPU and for how long. However, Ruby adds its own layer of control through the Global VM Lock (GVL).

In Ruby, a thread can execute CPU bound code only if it holds the GVL. The Ruby VM lets a thread hold the GVL for up to 100 milliseconds at a time. After that, the thread is forced to release the GVL if another thread is waiting to execute CPU bound code. This ensures that waiting Ruby threads are not starved.

When a thread is executing CPU-bound code, it will continue until either:

- It completes its CPU-bound work.

- It hits an I/O operation (which automatically releases the GVL).

- It reaches the limit of 100ms.

While a thread is running, a background timer thread in the Ruby VM checks every 10ms how long the current Ruby thread has been running. If the thread has been running longer than the thread quantum (100ms by default), the Ruby VM takes the GVL back from the active thread and gives it to the next thread waiting in the queue. When a thread gives up the GVL (either voluntarily or because it was forced to), it goes to the back of the queue.
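The forced hand-off can be observed from plain Ruby. In the sketch below, two purely CPU bound threads each log when they start and finish. Because the running thread loses the GVL once its quantum expires, both threads should start before either one finishes. The sketch assumes CRuby with the default 100ms quantum and assumes each thread's work takes well over 100ms on the machine running it. The busy_work helper is hypothetical.

```ruby
# Two purely CPU bound threads. If a thread kept the GVL until it finished,
# the second thread could not start before the first one was done.
def busy_work(iterations)
  x = 0
  iterations.times { |i| x += i % 3 }
  x
end

now = -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) }
events = Thread::Queue.new # thread-safe event log

threads = %w[A B].map do |name|
  Thread.new do
    events << [name, :start, now.call]
    busy_work(10_000_000) # assumed to take well over one 100ms quantum
    events << [name, :finish, now.call]
  end
end
threads.each(&:join)

log = Array.new(events.size) { events.pop }
first_finish = log.select { |_, kind, _| kind == :finish }.map(&:last).min
starts = log.select { |_, kind, _| kind == :start }.map(&:last)
puts "Both threads started before either finished: #{starts.all? { |t| t < first_finish }}"
```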

The default thread quantum is 100ms and, starting from Ruby 3.3, it can be configured using the RUBY_THREAD_TIMESLICE environment variable. Here is the link to the discussion.

This environment variable allows fine-tuning of thread scheduling behavior: a smaller quantum means more frequent thread switches, while a larger quantum means fewer switches.

Let's see what happens when we have two threads.

- T1 uses up its 100ms quantum and gives up the GVL to T2.

- T2 completes 50ms of CPU work and voluntarily gives up the GVL to do I/O.

- T1 completes 75ms of CPU work and voluntarily gives up the GVL to do I/O.

- Both T1 and T2 are now doing I/O and don't need the GVL.

This means Thread 2 would have finished a lot sooner if it had quicker access to the CPU: it waited 100ms for T1 to release the GVL before it could do its 50ms of work. To make the CPU available sooner, we can reduce the number of threads competing for it. But we need to play a balancing game. If the CPU is idle, we are paying for processing capacity for no reason. If the CPU is extremely busy, requests will take longer to process.
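The two-thread scenario above can be replayed with a toy round-robin model of the GVL queue. This is a simplification, not how CRuby is implemented: it ignores I/O, the timer thread's 10ms check interval and OS scheduling. The simulate_gvl helper and its quantum parameter are hypothetical.

```ruby
# Toy model of GVL hand-off: each thread needs some CPU time (in ms) before its next I/O.
# A thread runs for at most `quantum` ms, then goes to the back of the queue.
def simulate_gvl(cpu_needed, quantum: 100)
  queue = cpu_needed.to_a # [[name, remaining_ms], ...] in the order threads asked for the GVL
  clock = 0
  timeline = []

  until queue.empty?
    name, remaining = queue.shift
    slice = [remaining, quantum].min
    timeline << [name, clock, clock + slice]
    clock += slice
    remaining -= slice
    # Forced to release the GVL with work left: back of the queue.
    queue.push([name, remaining]) if remaining > 0
  end

  timeline
end

# T1 needs 100ms + 75ms of CPU work, T2 needs 50ms (the scenario above).
timeline = simulate_gvl({ "T1" => 175, "T2" => 50 })
timeline.each { |name, from, to| puts "#{name} holds the GVL #{from}ms-#{to}ms" }
```

This prints T1 for 0ms-100ms, T2 for 100ms-150ms, then T1 for 150ms-225ms. With quantum: 50, T2 would hold the GVL from 50ms to 100ms and could start its I/O 50ms earlier, at the cost of more switches for T1.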

Thread switching can lead to misleading data

Let's take a look at a simple code given below. This code is taken from a blog by Jean Boussier.

```ruby
start = Time.now
database_connection.execute("SELECT ...")
query_duration = (Time.now - start) * 1000.0
puts "Query took: #{query_duration.round(2)}ms"
```

The code looks simple. If the result is, say, "Query took: 80ms", then you would think that the query actually took 80ms. But now we know two things:

- Executing a database query is an I/O bound operation.

- Once the I/O bound operation is done, the thread might not immediately get hold of the GVL to execute CPU bound code.

Think about it. What if the query took only 10ms, and for the remaining 70ms the thread was waiting for the CPU because of the GVL? The only way to know which portion took how much time is by instrumenting the GVL.
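The effect can be reproduced without a database. In the sketch below, sleep 0.01 stands in for a 10ms query, and two background threads keep the CPU busy. Once the "query" completes, the measuring thread must wait for the GVL before it can read the clock again, so the measured time is typically well above 10ms. The burn_cpu helper is hypothetical, and the exact numbers depend on the machine.

```ruby
# Hypothetical helper: burn CPU for roughly `seconds` while holding the GVL.
def burn_cpu(seconds)
  deadline = Process.clock_gettime(Process::CLOCK_MONOTONIC) + seconds
  x = 0
  x += 1 while Process.clock_gettime(Process::CLOCK_MONOTONIC) < deadline
  x
end

# Two busy threads compete for the GVL in the background.
busy = Array.new(2) { Thread.new { burn_cpu(1.0) } }
sleep 0.05 # give the busy threads time to start

start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
sleep 0.01 # stand-in for a 10ms database query; sleep releases the GVL
measured_ms = (Process.clock_gettime(Process::CLOCK_MONOTONIC) - start) * 1000.0

busy.each(&:join)
puts "Query took: #{measured_ms.round(2)}ms (the simulated query itself took 10ms)"
```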

Visualizing the effect of the GVL

To better understand the effect of multiple threads when it comes to Ruby's performance, let's do a quick test. We'll start with a cpu_intensive method that performs pure arithmetic operations in nested loops, creating a workload that is heavily CPU dependent.

Here is the code.

Running this script produced the following output:

```
Running demonstrations with GVL tracing...
Starting demo with 1 threads doing CPU-bound work
Time elapsed: 7.4921 seconds
Starting demo with 3 threads doing CPU-bound work
Time elapsed: 7.8146 seconds
```

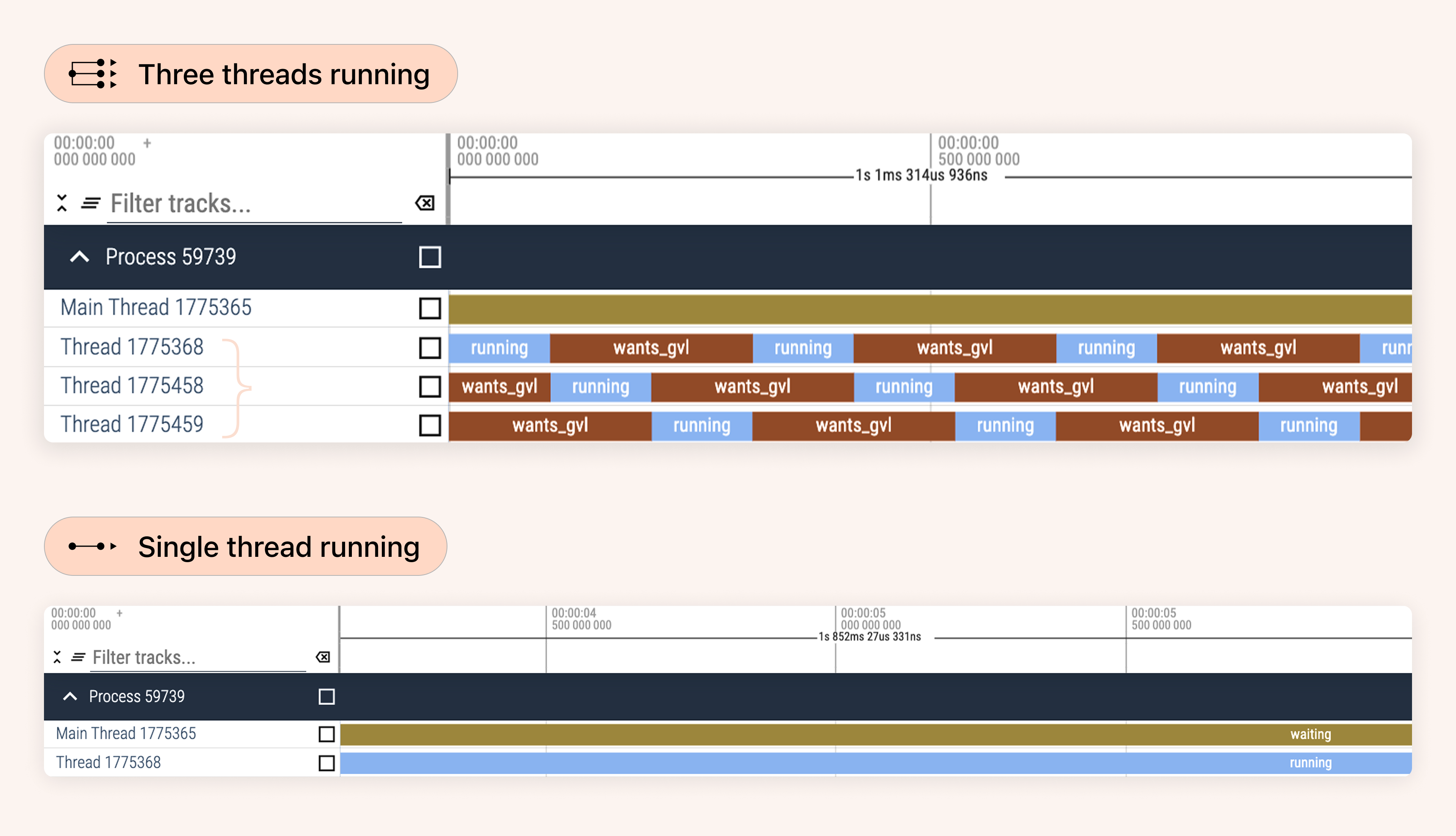

From the output, we can see that for CPU-bound work, a single thread performed slightly better (7.49 seconds vs 7.81 seconds). Why? Let's visualize the result with the help of the traces the script generated using the gvl-tracing gem. The trace files can be visualized using Perfetto, which provides a timeline view showing how threads interact with the GVL.

We can see above that for CPU-bound work, a single thread never waits for the GVL. With three threads, however, each thread waits for the GVL multiple times.
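The original script isn't reproduced in this post. Below is a minimal sketch of a similar experiment without the gvl-tracing instrumentation: it splits the same total amount of pure arithmetic across 1 and then 3 threads. The cpu_intensive and run_demo names and the iteration counts are assumptions, so the timings will not match the ones above. On CRuby you should see no speedup from the extra threads.

```ruby
# Hypothetical CPU bound workload: pure arithmetic in nested loops, no I/O.
def cpu_intensive(outer)
  total = 0
  outer.times do |i|
    1_000.times { |j| total += (i * j) % 7 }
  end
  total
end

# Split the same total amount of work across thread_count threads.
def run_demo(thread_count, total_outer: 3_000)
  start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  threads = Array.new(thread_count) { Thread.new { cpu_intensive(total_outer / thread_count) } }
  threads.each(&:join)
  Process.clock_gettime(Process::CLOCK_MONOTONIC) - start
end

single = run_demo(1)
multi = run_demo(3)
puts format("1 thread: %.2fs, 3 threads: %.2fs", single, multi)
```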

Understanding the advantage of multiple threads in mixed workloads

Now let's look at mixed workloads in single-threaded and multi-threaded environments. We'll use a separate script with a mixed_workload method that combines CPU-bound work with I/O operations. We use IO.select with blocking behavior to simulate I/O operations. This creates actual I/O blocking that releases the GVL and shows as "waiting" in the GVL trace, accurately representing real-world I/O operations like database queries.

Here is the code for the mixed workload test.

Running this script with 1 thread and 3 threads produced the following output:

```
Running demonstrations with GVL tracing...
Starting demo with 1 thread doing Mixed I/O and CPU work
Time elapsed: 9.32 seconds
Starting demo with 3 threads doing Mixed I/O and CPU work
Time elapsed: 6.1344 seconds
```

The key advantage of multiple threads in mixed workloads lies in how the GVL is managed during I/O operations. When a thread encounters an I/O operation (like a database query, network call, or file read), it voluntarily releases the GVL. This is fundamentally different from CPU-bound work, where threads compete for the GVL and one thread must wait for another to finish or reach the 100ms quantum limit.

During I/O operations, the thread is essentially blocked waiting for an external resource (database, network, disk). While waiting, the thread doesn't need the GVL because it's not executing Ruby code. This creates an opportunity for other threads to acquire the GVL and do useful CPU work. The result is that CPU cycles that would otherwise be wasted during I/O waits are now being utilized productively by other threads.
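A minimal sketch of a similar mixed-workload experiment follows. It is not the original script. Each task does a little CPU work and then blocks in IO.select on a pipe that never becomes readable, which waits until the timeout while the GVL is released. The writer end stays open so the reader never hits end-of-file. The mixed_workload and run_demo names, the iteration counts and the timeouts are all assumptions.

```ruby
# Hypothetical mixed workload: some CPU work, then a blocking I/O wait.
def mixed_workload(reader, rounds: 2)
  rounds.times do
    200_000.times.sum { |i| i % 7 }     # CPU bound part (holds the GVL)
    IO.select([reader], nil, nil, 0.05) # I/O bound part (GVL released while waiting)
  end
end

# Run the same total number of tasks with thread_count threads.
def run_demo(thread_count, total_tasks: 6)
  reader, writer = IO.pipe # nothing is ever written, so `reader` never becomes readable
  tasks_per_thread = total_tasks / thread_count
  start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  threads = Array.new(thread_count) do
    Thread.new { tasks_per_thread.times { mixed_workload(reader) } }
  end
  threads.each(&:join)
  Process.clock_gettime(Process::CLOCK_MONOTONIC) - start
ensure
  reader&.close
  writer&.close
end

single = run_demo(1)
multi = run_demo(3)
puts format("1 thread: %.2fs, 3 threads: %.2fs", single, multi)
```

With one thread, every I/O wait leaves the CPU idle. With three threads, one thread's wait overlaps another thread's CPU work, so the run should finish noticeably sooner.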

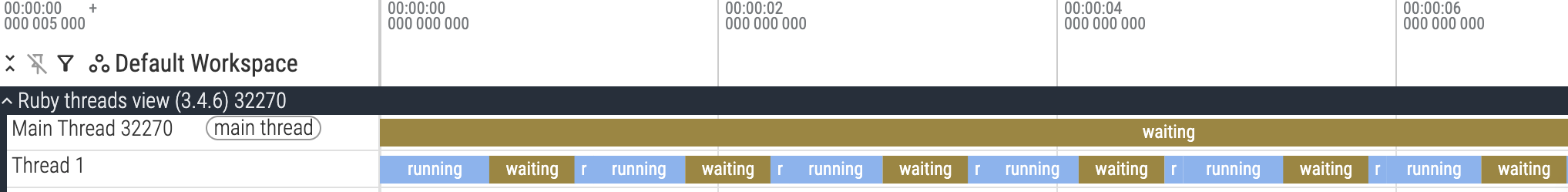

Let's visualize this with the single-threaded case first:

In the single-threaded case, the thread waits for each I/O operation to complete. During these I/O waits, the CPU sits idle. The thread performs some CPU work, then waits for I/O, then does more CPU work, then waits for I/O again. During all the I/O wait periods, no productive work is happening. The CPU is available but there's no other thread to utilize it.

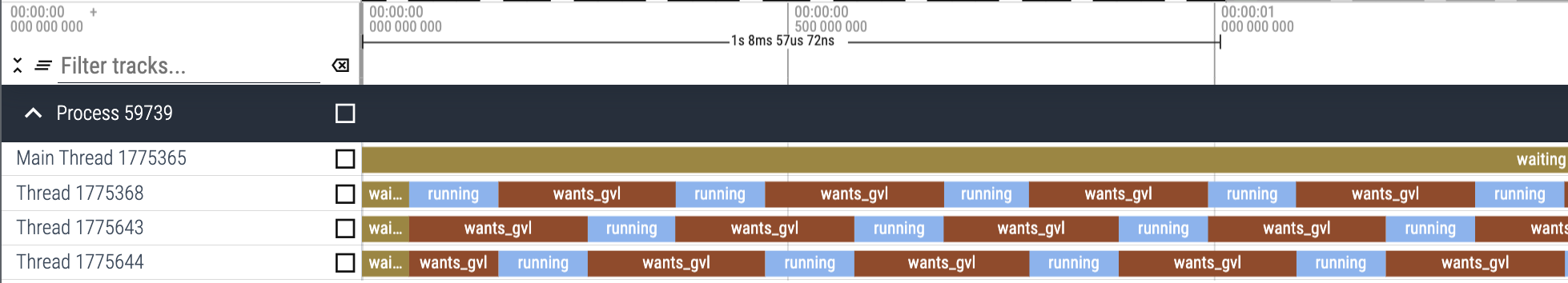

Now let's look at the multi-threaded case with three threads:

When there are three threads, the situation changes a bit. Threads now occasionally spend time waiting for the GVL, but the overall throughput is significantly better.

When Thread 1 releases the GVL to perform I/O, Thread 2 can immediately acquire it and start executing CPU-bound work. While Thread 2 is working, Thread 1 might still be waiting for its I/O operation to complete. Then when Thread 2 releases the GVL for its own I/O operation, Thread 3 can acquire it. This creates a pipeline effect where threads constantly hand off the GVL to each other, ensuring that the CPU is almost always doing useful work.

The small amount of GVL contention we see in the multi-threaded case (threads waiting for GVL) is more than compensated for by the elimination of idle CPU time. Instead of the CPU sitting idle during I/O operations, other threads keep it busy.

This is why Rails applications with typical workloads (lots of database queries, API calls, and other I/O operations) benefit significantly from having multiple threads.

Why can't we increase the thread count to a really high value?

In the previous section, we saw that increasing the number of threads can help in utilizing the CPU better. So why can't we increase the number of threads to a really high value? Let us visualize it.

In the hope of increasing performance, let us bump up the number of threads in

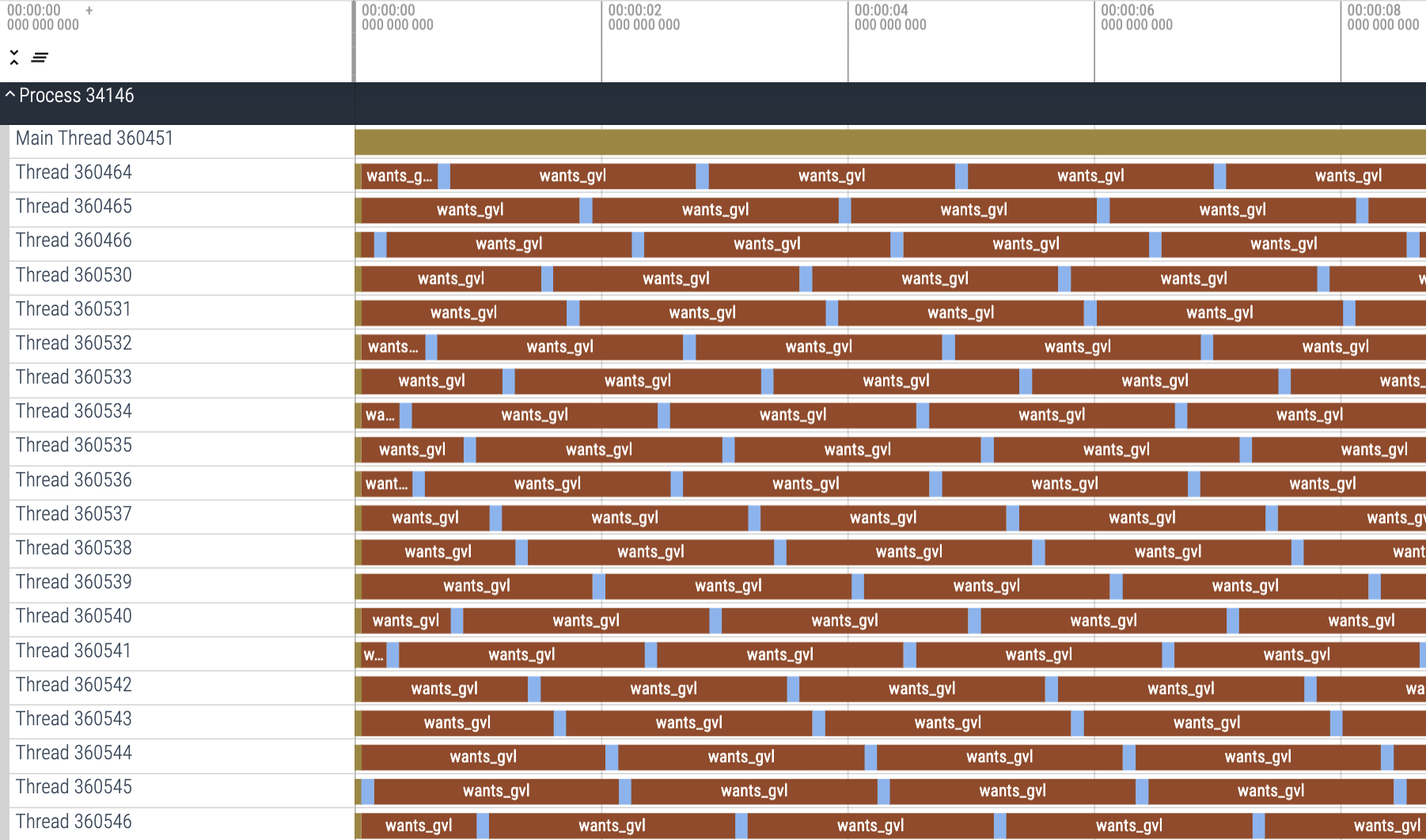

the previous code snippet to 20 and see the gvl-tracing result.

As we can see in the above picture, the amount of GVL contention here is massive: threads spend much of their time waiting to get hold of the GVL. The same will happen inside a Puma process if we increase the number of threads to a very high value. Each request is handled by a thread, so GVL contention means that requests keep waiting, which increases latency.

What's next

In the coming blogs, we'll see how we can figure out the ideal value for max_threads, both theoretically and empirically, based on our application's workload.

This was Part 2 of our blog series on scaling Rails applications. If any part of the blog is not clear to you, please write to us on LinkedIn, Twitter, or the BigBinary website.
